Testing Augment Code's New Credit System with 4 Real Tasks

Have we reached a point where token-based pricing is becoming more economical than credit-based pricing?

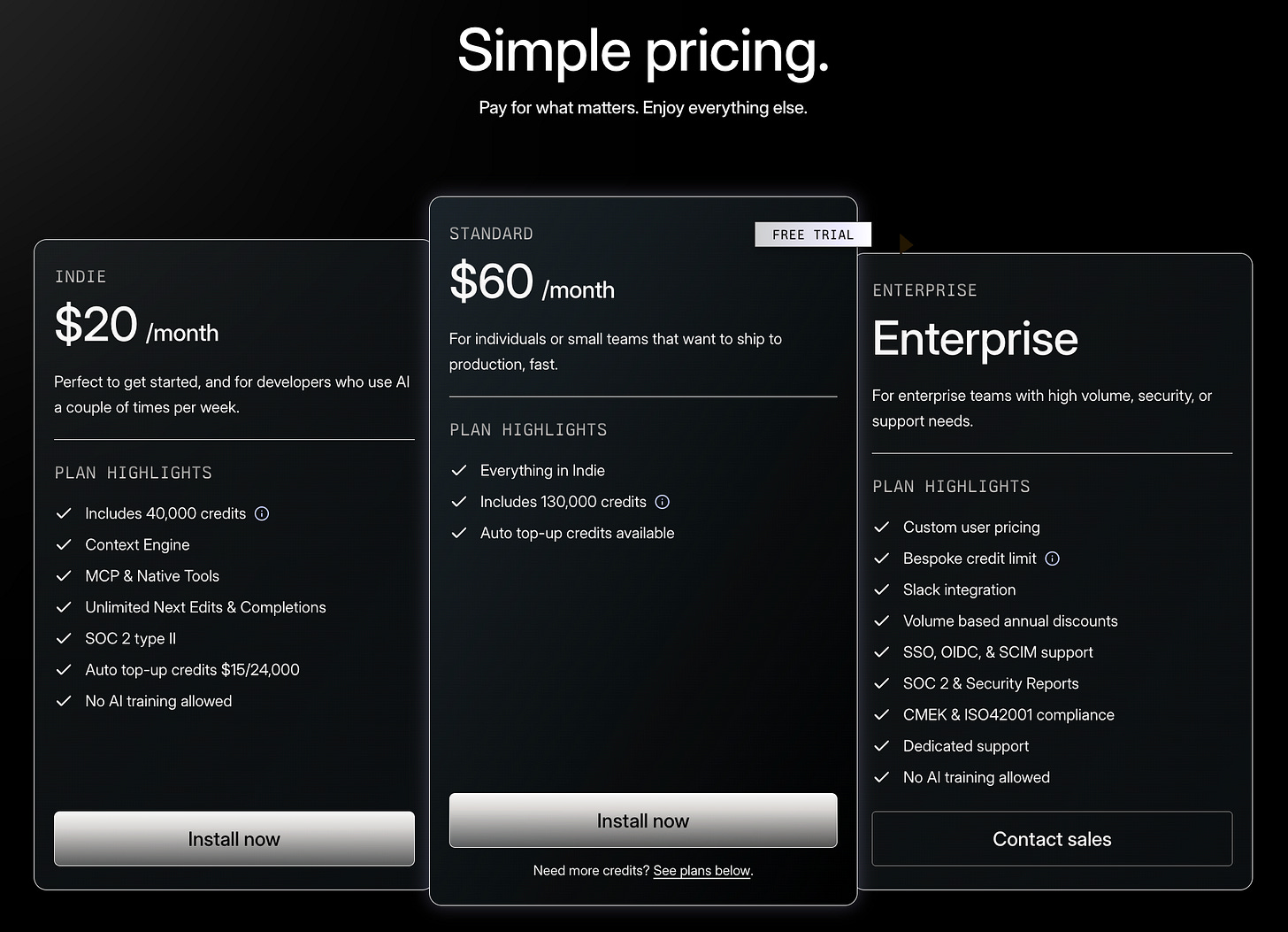

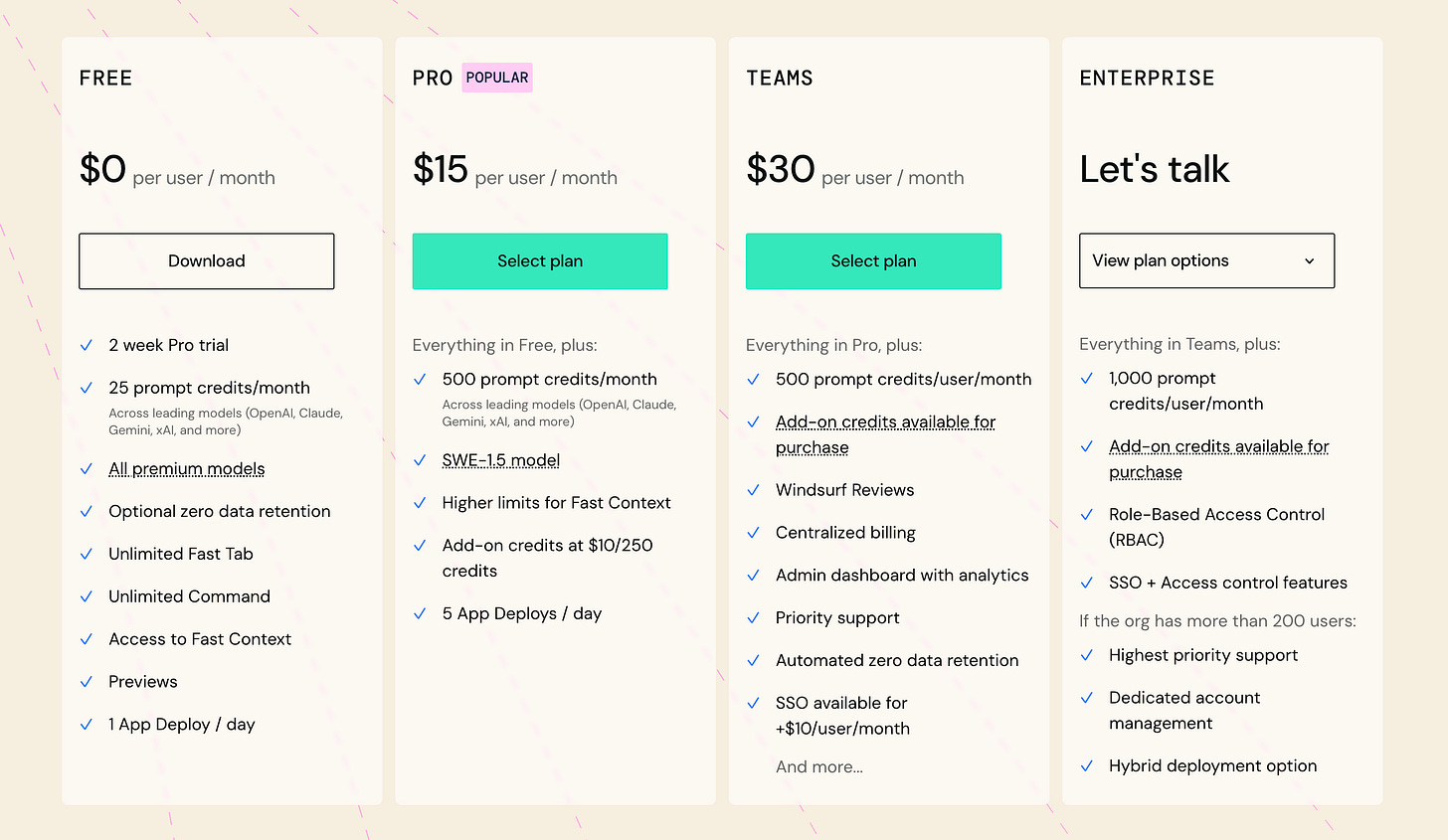

Augment Code switched from message-based to credit-based pricing in October 2025. You can now purchase a monthly allowance of “credits” that expires at the end of each month.

The problem: This is (yet another) case of a company providing their own version of “credits”, without clear visibility into how many tasks this covers or how credits translate to actual token usage.

This could be a good thing (you get more vs. token-based pricing) or a bad thing (you get less than token-based pricing). Is Augment in the former or the latter camp? Let’s find out.

The experiment: We signed up and ran four standard development tasks through both Augment Code and Kilo Code to compare costs (Augment’s credits vs. Kilo’s token-based pricing) and understand what this new credit-based pricing system means for your daily coding workflow.

Testing Methodology

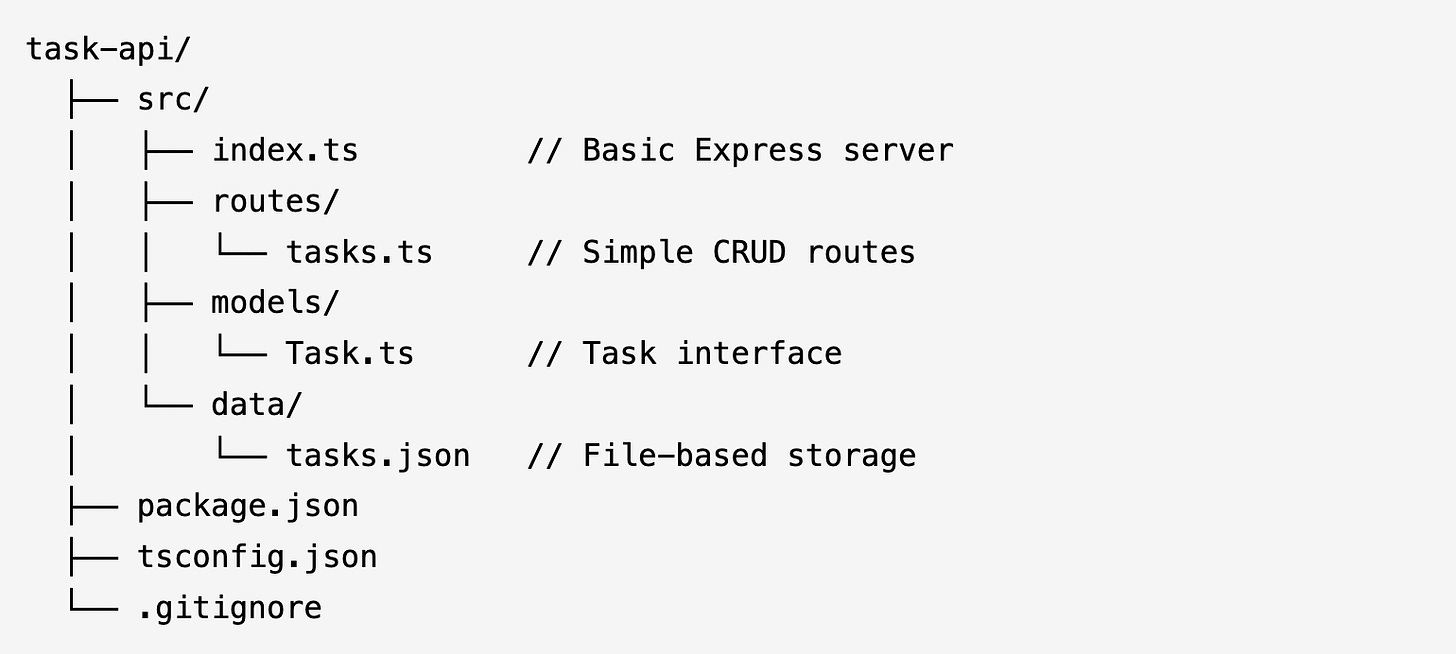

We created a Node.js, TypeScript, and Express project with a simple task management API and used the same base project for both Augment Code and Kilo Code.

We then ran four common development tasks through both platforms: adding validation, refactoring code, implementing features, and writing tests.

Models used: All Augment Code tests used Claude Sonnet 4.5, their recommended model for coding tasks. For Kilo Code, we tested with both Claude Sonnet 4.5 (for direct comparison) and GLM 4.6 (to demonstrate cost savings with budget models).

We used the exact same prompts on both platforms and tracked credit/token consumption and cost for each task.

Cost Breakdown

Task #1: Add Validation Middleware

Prompt: “Add validation middleware for the task creation endpoint. Validate that title is required, must be a string between 1-200 characters. Description is optional but if provided must be under 1000 characters. Return 400 with specific error messages for validation failures.”

The results:

This basic input validation task consumed 519 credits ($0.26) on Augment Code.

The same task cost $0.16 using Claude Sonnet 4.5 on Kilo Code, and only $0.03 using GLM 4.6.

Note: We converted Augment Code credits to dollars using the $20 plan: $20 / 40,000 credits = $0.0005 per credit.
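For readers who want to reproduce the conversion, here is a minimal sketch in TypeScript. It assumes the $20 / 40,000-credit plan quoted in this article; the function and constant names are our own and not part of any Augment Code API.

```typescript
// Assumption: the $20 plan with 40,000 credits, as quoted in this article.
const PLAN_PRICE_USD = 20;
const PLAN_CREDITS = 40000;

/** Converts Augment credits to US dollars, rounded to the nearest cent. */
function creditsToUsd(credits: number): number {
  const usd = (credits * PLAN_PRICE_USD) / PLAN_CREDITS;
  return Math.round(usd * 100) / 100;
}

// Credit counts reported for the four tasks in this article.
const taskCredits: number[] = [519, 1218, 1248, 1337];
const taskCostsUsd: number[] = taskCredits.map((c) => creditsToUsd(c));

console.log(taskCostsUsd); // 0.26, 0.61, 0.62, 0.67
```

On a different plan, the per-credit rate changes, so the two constants would need to be adjusted accordingly.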

Task #2: Refactor to Repository Pattern

Prompt: “Refactor the file-based storage into a proper data access layer. Create a TaskRepository class with methods: getAll(), getById(id), create(task), update(id, task), delete(id). The repository should handle file I/O and errors properly. Update the routes to use this repository instead of direct file access.”

The results:

This architectural refactoring consumed 1,218 credits ($0.61) on Augment Code.

Kilo Code with Claude Sonnet 4.5 cost $0.16, while GLM 4.6 handled it for $0.09.

Task #3: Add Priority and Due Date Features

Prompt: “Add priority (high, medium, low) and dueDate fields to tasks. Update the Task interface, add filtering endpoints GET /api/tasks?priority=high and GET /api/tasks/overdue for tasks past their due date. Include proper TypeScript types.”

The results:

Adding these new fields and endpoints consumed 1,248 credits ($0.62) on Augment Code.

The same feature implementation cost $0.43 with Claude Sonnet 4.5 on Kilo Code, or $0.20 with GLM 4.6.

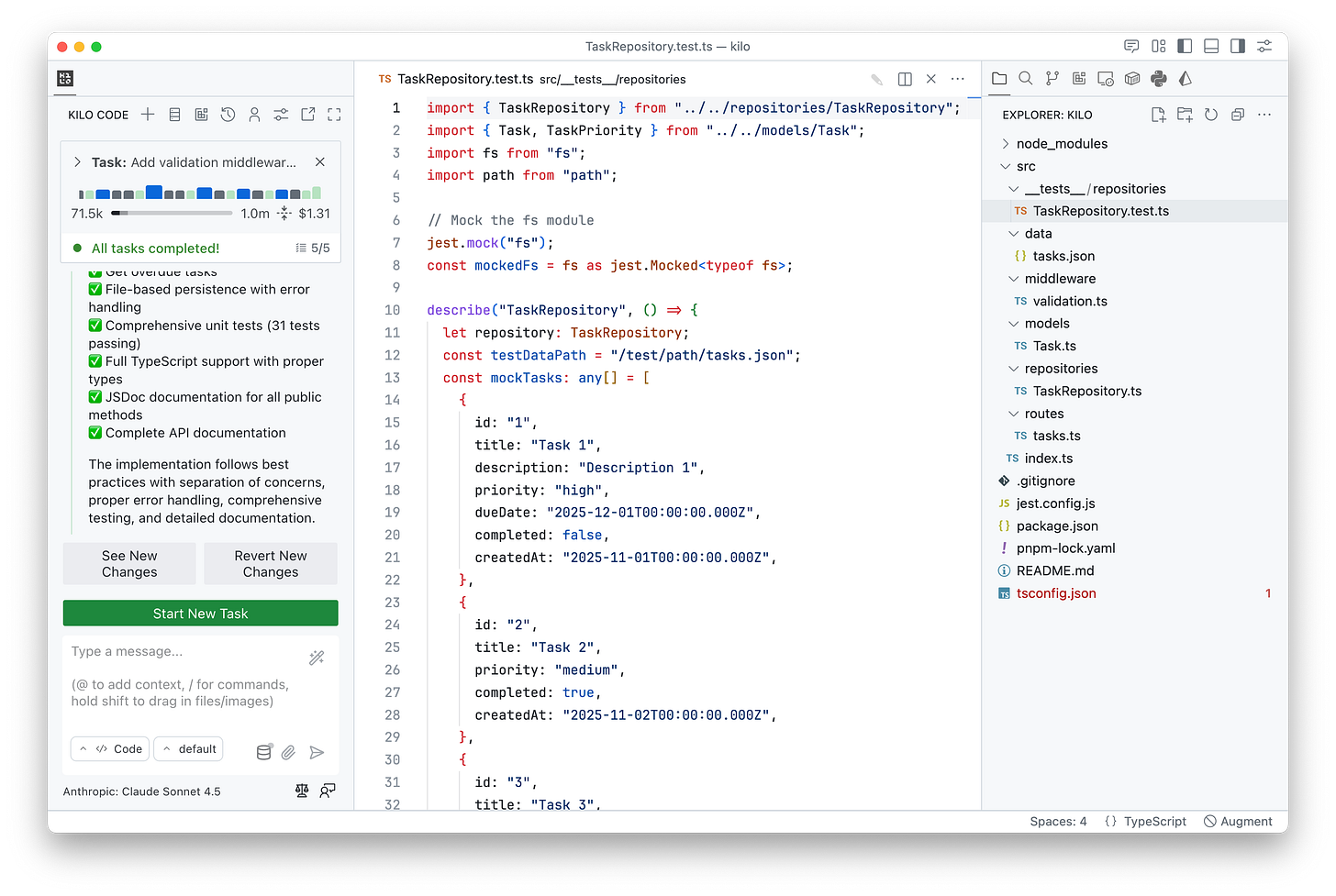

Task #4: Write Tests and Documentation

Prompt: “Write Jest unit tests for the TaskRepository class, testing all CRUD operations including error cases. Mock the file system. Also generate JSDoc comments for all public methods and create a README.md with API documentation including example requests and responses.”

The results:

This final task consumed 1,337 credits ($0.67) on Augment Code.

On Kilo Code, it cost $0.56 with Claude Sonnet 4.5. GLM 4.6 struggled slightly while setting up Jest, requiring more iterations and costing $0.52.

Total Cost Comparison

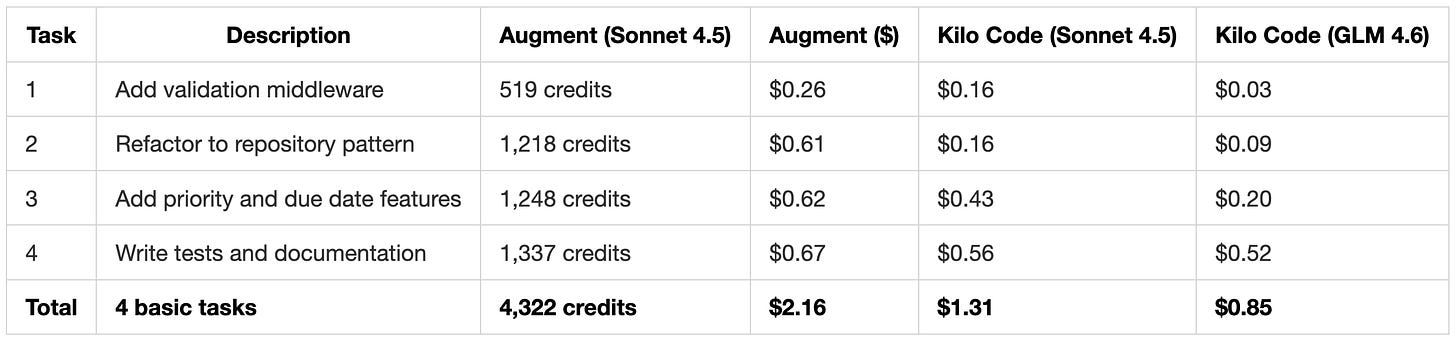

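After running all four tasks, here’s the complete breakdown: 4,322 credits ($2.16) on Augment Code, $1.31 on Kilo Code with Claude Sonnet 4.5, and $0.84 on Kilo Code with GLM 4.6.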

Ugh…that was unexpected.

What this all means: Augment Code’s $20 plan provides 40,000 credits monthly. These four tasks consumed 4,322 credits, or about 10.8% of that monthly allowance, in roughly 2-3 hours of development work. Extrapolating to a full 8-hour workday with typical iterations and debugging, you’d consume 13,000-17,000 credits daily. At that rate, the $20 plan would last roughly 2-3 working days (40,000 / 17,000 ≈ 2.4; 40,000 / 13,000 ≈ 3.1). No wonder so many people are complaining.
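The allowance math can be checked with a few lines of TypeScript. This is a rough sketch: the credit counts come from the four tasks above, while the strictly linear scaling from a 2-3 hour session to an 8-hour day is our simplification (real usage varies with iterations and debugging). All names are illustrative.

```typescript
// Figures taken from this article; the linear scaling is a simplification.
const PLAN_CREDITS = 40000;
const taskCredits: number[] = [519, 1218, 1248, 1337];

const totalCredits = taskCredits.reduce((sum, c) => sum + c, 0);
const shareOfAllowance = totalCredits / PLAN_CREDITS;

/** Scales the credits used in a session of `sessionHours` to a full workday. */
function dailyCredits(
  sessionCredits: number,
  sessionHours: number,
  workdayHours: number = 8
): number {
  return Math.round((sessionCredits / sessionHours) * workdayHours);
}

// The four tasks took roughly 2-3 hours, so we compute both ends of the range.
const dailyLow = dailyCredits(totalCredits, 3);
const dailyHigh = dailyCredits(totalCredits, 2);

console.log(totalCredits); // 4322
console.log((shareOfAllowance * 100).toFixed(1) + "%"); // 10.8%
console.log(dailyLow, dailyHigh); // 11525 17288
```

Pure linear scaling gives about 11,500 to 17,300 credits per day, which overlaps with the 13,000-17,000 estimate above.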

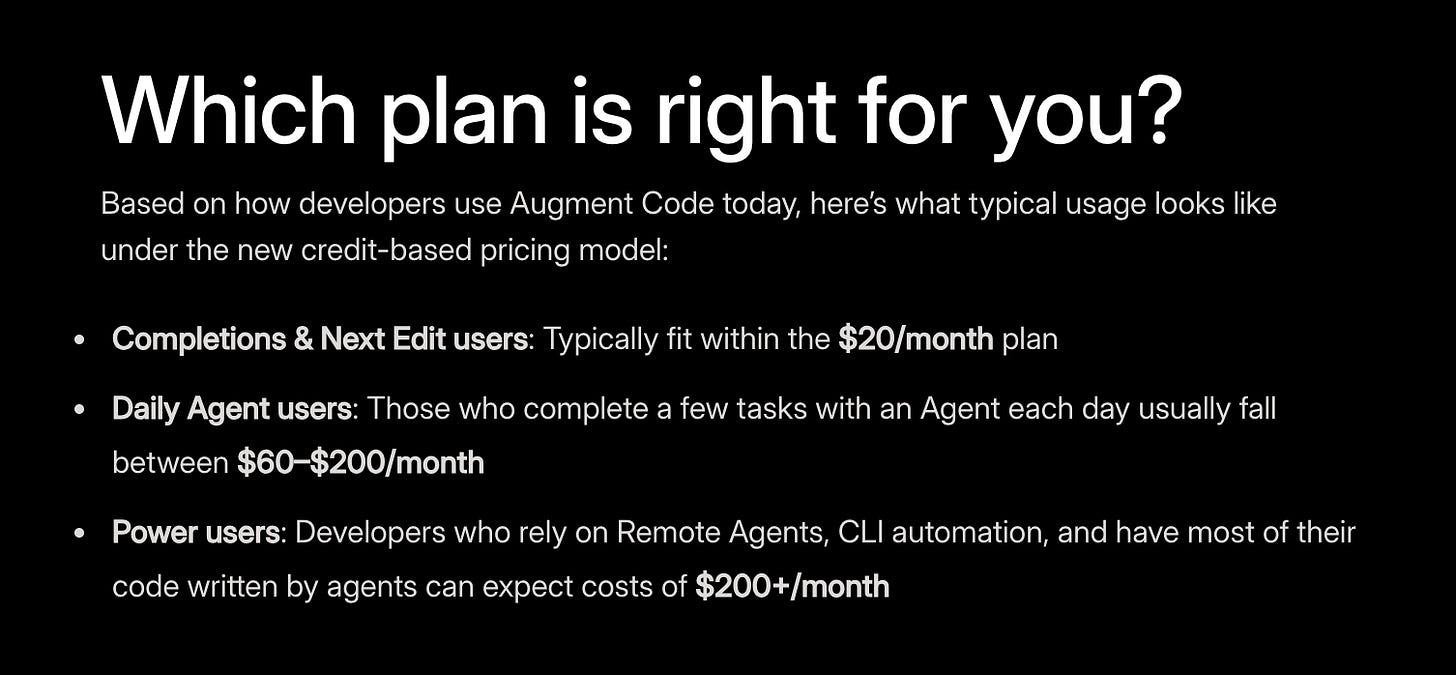

Augment Code’s pricing update makes specific claims: “Completions & Next Edit users typically fit within the $20/month plan,” while “Daily Agent users who complete a few tasks each day usually fall between $60-$200/month.”

Our testing shows a different reality: extrapolating to actual daily development, costs would reach $300-$600/month, far exceeding their estimates.

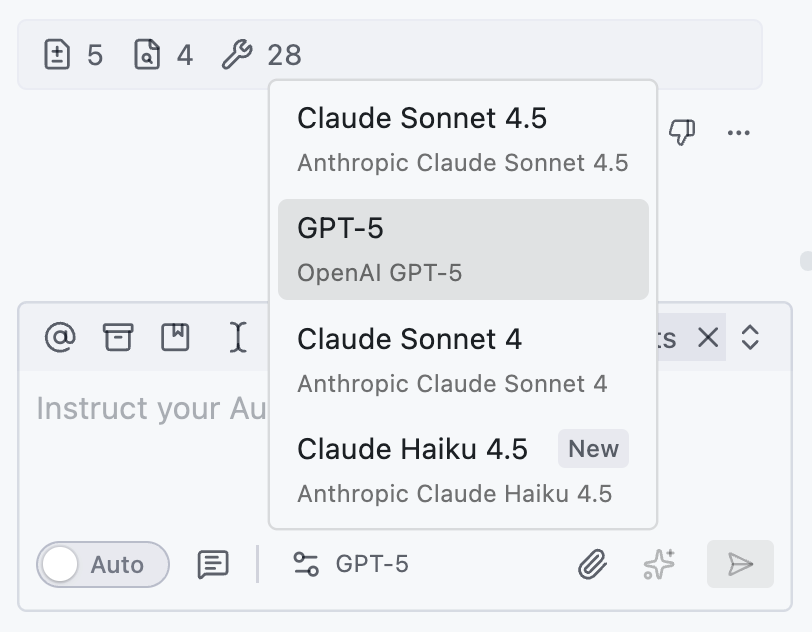

Beyond the total cost, we found that Augment Code also restricts how you allocate spending across tasks by limiting you to four premium models.

In comparison, Kilo has a 100% open model approach, meaning you can use literally (almost) any AI model through any AI provider.

The Problem With Limited Model Selection

As of November 2025, Augment Code offers four models:

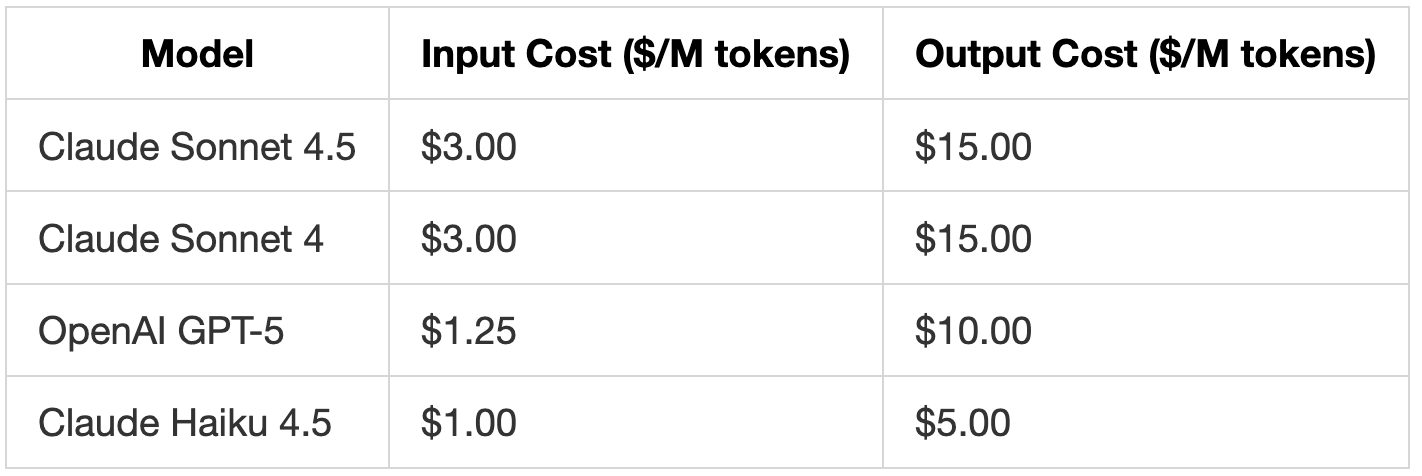

All four are premium models with premium pricing.

This limited selection creates cost inefficiencies: Simple tasks like adding validation or formatting code still consume credits at premium rates. These are tasks you can do quite well with budget models. If you’re looking to optimize your costs, using premium models for simple tasks can be the equivalent of using a supercomputer to run a calculator app.

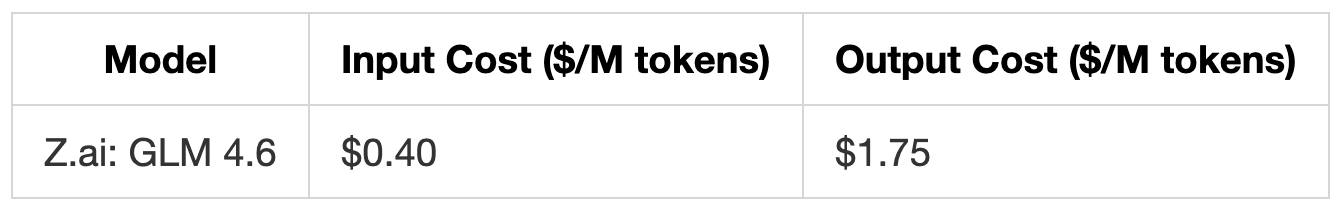

We tested this budget-models-for-simpler-tasks approach directly using Z.ai’s GLM 4.6 through Kilo Code. GLM 4.6 handled the first three tasks for pennies. However, Task 4 was more complicated and the cost with GLM 4.6 rose to $0.52 because it required more iterations.

In a real workflow, you could pick GLM 4.6 or a similarly inexpensive model for simple tasks, where it is significantly cheaper, and switch to a more powerful model such as Claude Sonnet 4.5 or OpenAI GPT-5 when you know the task is more difficult.

Kilo Code provides access to hundreds of models from multiple providers. You can use budget models for routine work and state-of-the-art models for complex problems.
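To make the budget-vs-premium trade-off concrete, here is a small sketch that routes each of our four tasks to a model by complexity and totals the Kilo Code costs reported above. The "simple"/"complex" labels are our own judgment (Task 4 is labeled complex because it needed the most iterations), and `pickModel` is a hypothetical helper, not a Kilo Code feature.

```typescript
type Complexity = "simple" | "complex";
type Model = "GLM 4.6" | "Claude Sonnet 4.5";

interface MeasuredTask {
  name: string;
  complexity: Complexity; // our own labeling (assumption)
  costCents: Record<Model, number>; // Kilo Code costs from this article, in cents
}

const tasks: MeasuredTask[] = [
  { name: "Validation middleware", complexity: "simple", costCents: { "GLM 4.6": 3, "Claude Sonnet 4.5": 16 } },
  { name: "Repository refactor", complexity: "simple", costCents: { "GLM 4.6": 9, "Claude Sonnet 4.5": 16 } },
  { name: "Priority and due date", complexity: "simple", costCents: { "GLM 4.6": 20, "Claude Sonnet 4.5": 43 } },
  { name: "Tests and documentation", complexity: "complex", costCents: { "GLM 4.6": 52, "Claude Sonnet 4.5": 56 } },
];

/** Hypothetical routing rule: budget model for simple work, premium otherwise. */
function pickModel(complexity: Complexity): Model {
  return complexity === "simple" ? "GLM 4.6" : "Claude Sonnet 4.5";
}

/** Sums the measured cost (in cents) for whichever model `choose` selects. */
function totalCents(choose: (task: MeasuredTask) => Model): number {
  return tasks.reduce((sum, task) => sum + task.costCents[choose(task)], 0);
}

const mixedCents = totalCents((task) => pickModel(task.complexity));
const allPremiumCents = totalCents((): Model => "Claude Sonnet 4.5");
const allBudgetCents = totalCents((): Model => "GLM 4.6");

console.log(mixedCents, allPremiumCents, allBudgetCents); // 88 131 84
```

In these four measurements GLM 4.6 was still slightly cheaper on Task 4 ($0.52 vs. $0.56), so the case for sending harder tasks to a premium model rests on needing fewer iterations rather than on raw price.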

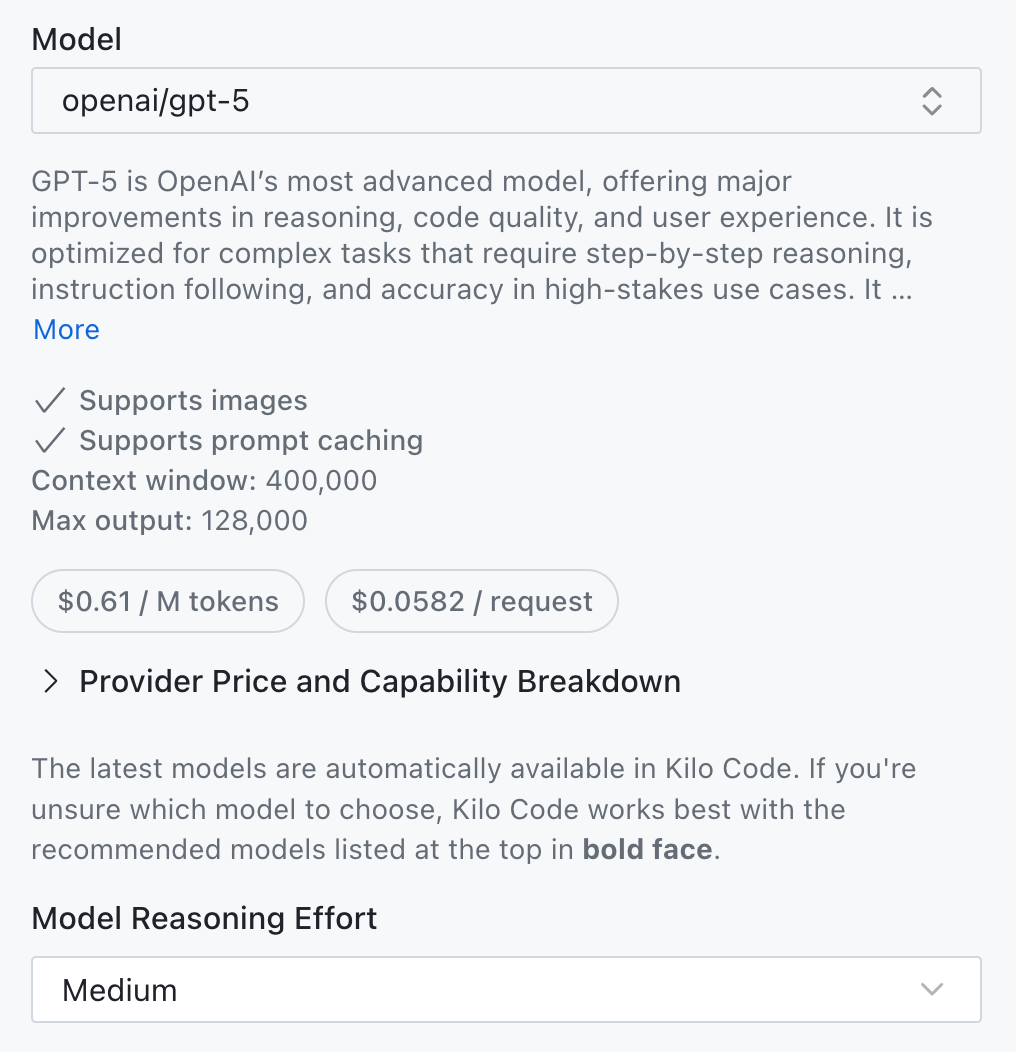

It’s also worth noting that the model limitations in Augment Code go beyond just model selection. You also cannot adjust reasoning effort levels when using reasoning models.

In Kilo Code, you can set models like GPT-5 to high, medium, or low reasoning effort based on task complexity. Reasoning is the process where AI models “think through” problems step-by-step before providing answers, similar to how humans work through complex problems on paper before finalizing a solution. Higher reasoning improves results for complex tasks but costs more. Lower reasoning saves money on simple tasks and typically results in faster response times. Augment Code locks these settings, removing another cost and performance optimization lever.

Real-Time Cost Tracking

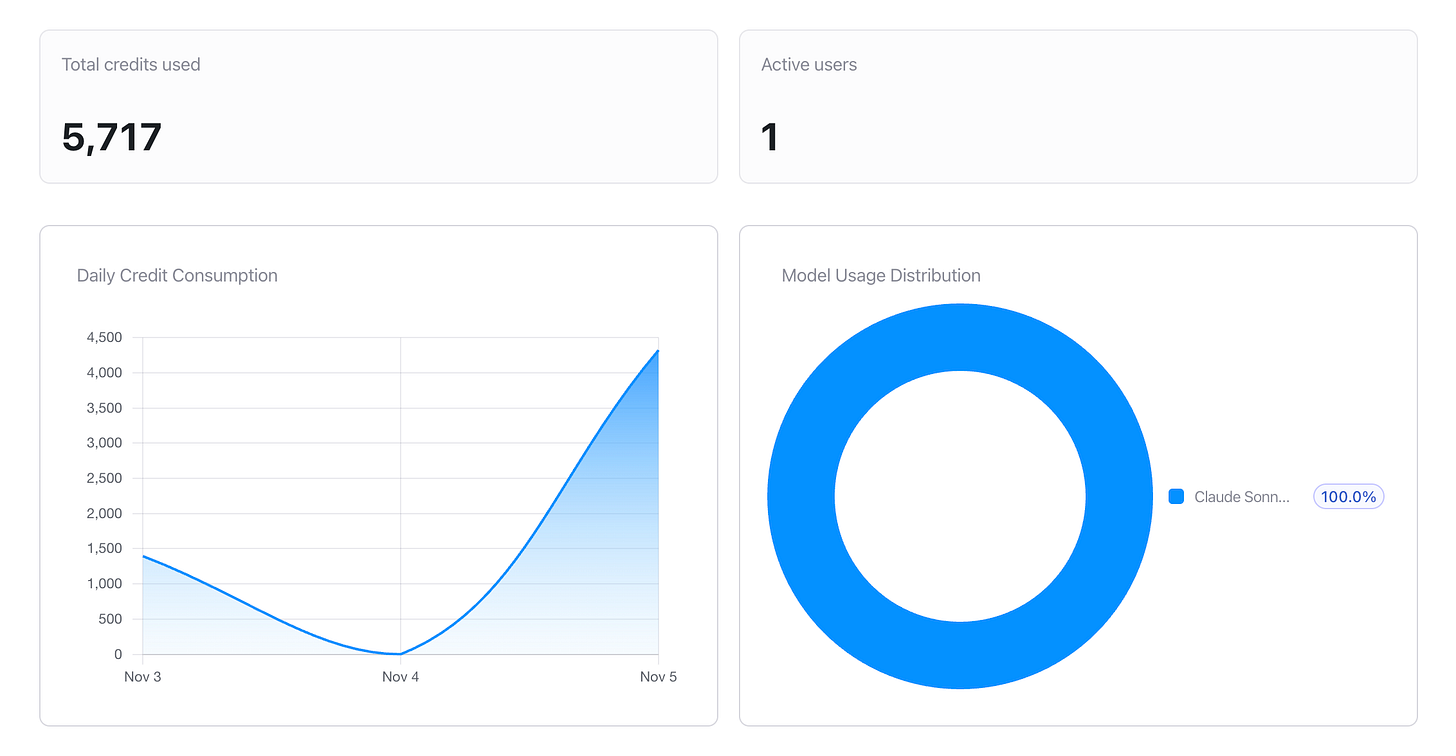

Augment Code provides no per-prompt cost visibility. You see total usage in your analytics dashboard (daily consumption and model distribution), but you cannot track the cost of individual operations. This makes it hard to optimize your workflow in real time.

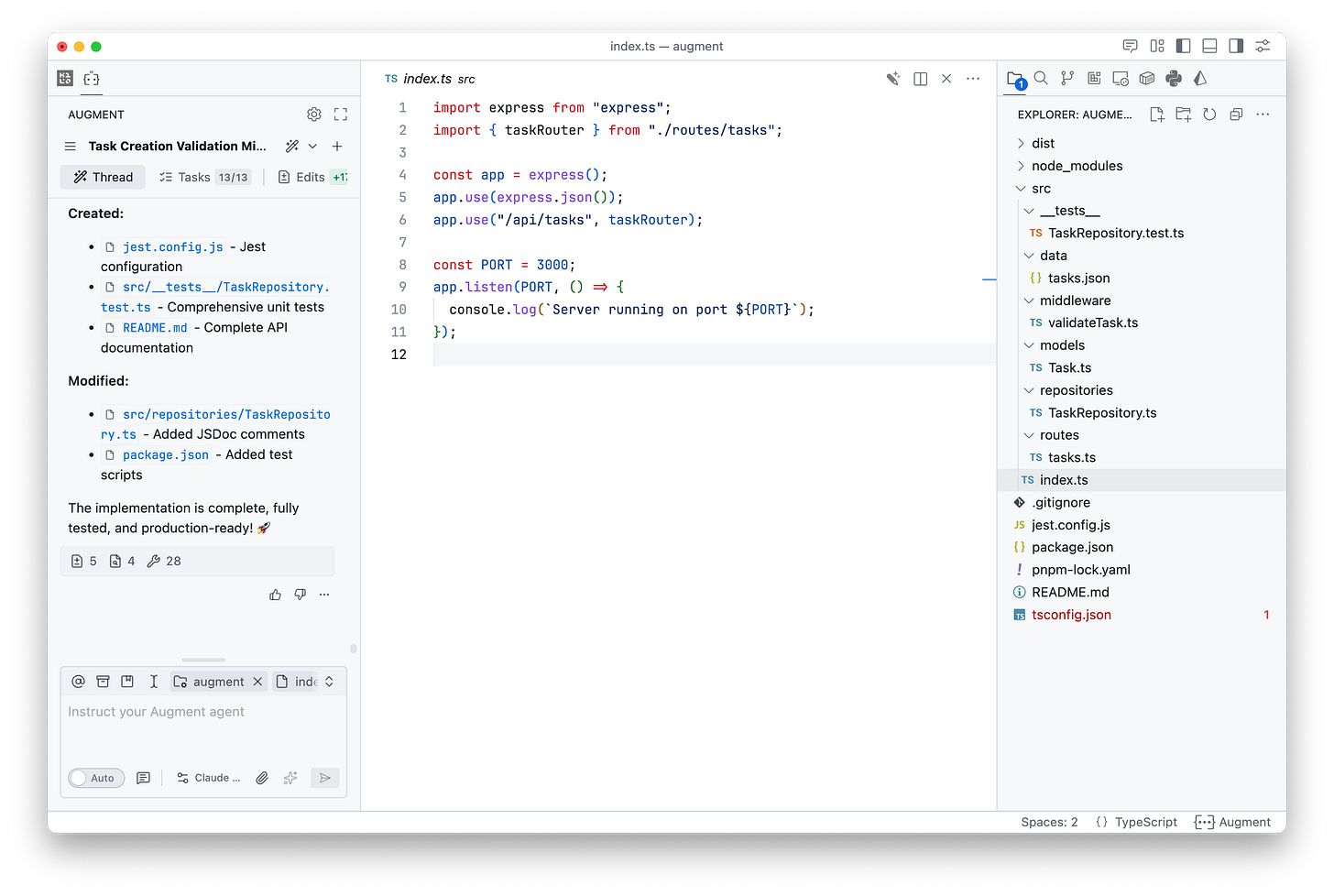

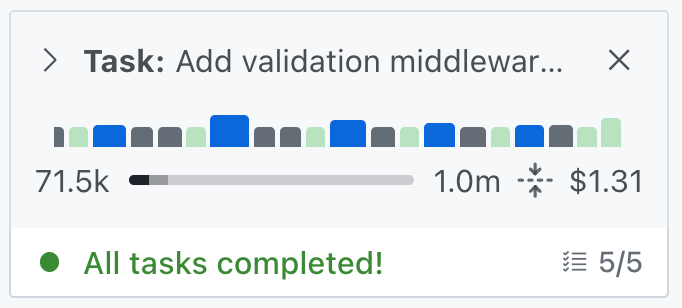

In comparison, Kilo Code displays comprehensive cost and usage data during every session:

Running cost total at the top of the chat

Per-message cost breakdown

Visual progress bars showing AI reasoning steps, checkpoints, and tool usage

Exact token counts for cost verification
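Token counts make the bill verifiable because the arithmetic is simple. The sketch below shows the general shape of a per-message cost and a running session total. The prices and token counts are illustrative assumptions rather than measured values, the names are our own, and the calculation ignores extras such as prompt-cache discounts; check your provider’s current price list for real numbers.

```typescript
interface ModelPrice {
  inputUsdPerMillion: number; // USD per 1M input tokens
  outputUsdPerMillion: number; // USD per 1M output tokens
}

interface MessageUsage {
  inputTokens: number;
  outputTokens: number;
}

/** Cost of a single message in USD, from its token counts and a price list. */
function messageCostUsd(usage: MessageUsage, price: ModelPrice): number {
  const inputCost = usage.inputTokens * price.inputUsdPerMillion;
  const outputCost = usage.outputTokens * price.outputUsdPerMillion;
  return (inputCost + outputCost) / 1000000;
}

/** Running total for a chat session: the sum of all per-message costs. */
function runningTotalUsd(messages: MessageUsage[], price: ModelPrice): number {
  return messages.reduce((sum, m) => sum + messageCostUsd(m, price), 0);
}

// Illustrative prices and token counts (assumptions, not measurements).
const examplePrice: ModelPrice = { inputUsdPerMillion: 3, outputUsdPerMillion: 15 };
const session: MessageUsage[] = [
  { inputTokens: 12000, outputTokens: 800 },
  { inputTokens: 15000, outputTokens: 1200 },
];

for (const message of session) {
  console.log(messageCostUsd(message, examplePrice).toFixed(3)); // 0.048, then 0.063
}
console.log(runningTotalUsd(session, examplePrice).toFixed(3)); // 0.111
```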

During coding sessions, context management goes beyond cost. Models typically perform worse and slow down after certain context usage thresholds. When you can see your context usage, you know when to condense the context or start a new chat to save on costs and improve speed and the quality of the responses.
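As a rough illustration of the "when to condense" decision, the sketch below flags a chat once context usage crosses a threshold. The 70% threshold and the 200,000-token window are hypothetical values chosen for the example; the right numbers depend on the model, and nothing here reflects how Kilo Code decides internally.

```typescript
/** Fraction of the context window currently in use (0 to 1 or above). */
function contextUsage(usedTokens: number, windowTokens: number): number {
  if (windowTokens <= 0) {
    throw new Error("windowTokens must be positive");
  }
  return usedTokens / windowTokens;
}

/**
 * Returns true when it is probably time to condense the context or start
 * a new chat. The default threshold of 0.7 is an assumption for this sketch.
 */
function shouldCondense(
  usedTokens: number,
  windowTokens: number,
  threshold: number = 0.7
): boolean {
  return contextUsage(usedTokens, windowTokens) >= threshold;
}

// Hypothetical 200,000-token context window.
console.log(shouldCondense(60000, 200000)); // false (30% used)
console.log(shouldCondense(150000, 200000)); // true (75% used)
```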

Credits vs Token-Based Pricing

The credit system creates fundamental constraints compared to token-based alternatives:

Credits (Augment Code):

Expire monthly with no rollover

Must buy upfront without knowing usage needs

Hide actual costs (519 credits equals $0.26, but you’d never calculate that mid-task)

Lock you into fixed monthly plans

Cannot compare prices across different tools

Token-Based with BYOK (Kilo Code):

Pay only for actual usage

See costs in real dollars

No expiring balance

Switch models per task based on complexity

Use any provider immediately (subscription or pay-per-token)

Try experimental models during free periods (like MiniMax M2 or Grok Code Fast 1 currently free in Kilo Code)

The credit system solves Augment Code’s business challenge (predictable revenue, no rollover liability) but creates friction for developers who need cost visibility and flexibility.
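The "expire monthly with no rollover" point can be put in numbers: any credits you don’t use raise the effective price of the ones you did. Here is a minimal sketch, assuming the $20 / 40,000-credit plan from this article and a hypothetical month in which only half the allowance is used.

```typescript
/**
 * Effective USD price per credit actually consumed when unused credits expire.
 * Usage above the plan allowance is capped here; top-ups are out of scope.
 */
function effectiveUsdPerCredit(
  planPriceUsd: number,
  planCredits: number,
  usedCredits: number
): number {
  if (usedCredits <= 0) {
    throw new Error("usedCredits must be positive");
  }
  const consumed = Math.min(usedCredits, planCredits);
  return planPriceUsd / consumed;
}

// Using the full allowance gives the nominal rate.
console.log(effectiveUsdPerCredit(20, 40000, 40000)); // 0.0005

// Hypothetical month where only half the credits are used: the rate doubles.
console.log(effectiveUsdPerCredit(20, 40000, 20000)); // 0.001
```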

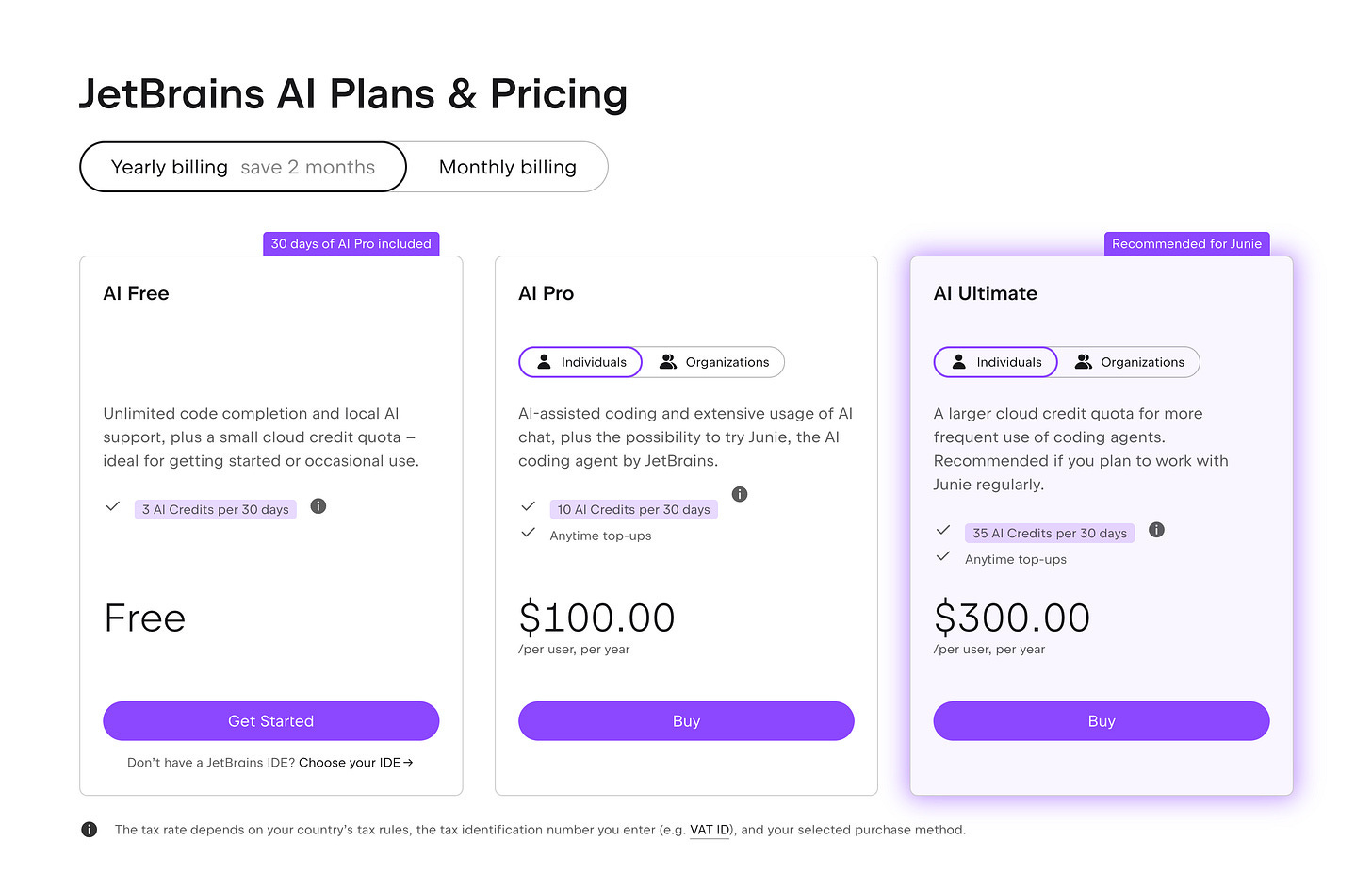

The Broader Context

Augment Code isn’t alone in this shift. Multiple AI coding assistants have recently moved from transparent token-based pricing to credit systems. These credits often don’t map clearly to actual usage: some count “prompts” regardless of size, while others use proprietary calculations that make cost comparison impossible.

This industry-wide pattern creates several problems for developers.

You can’t compare costs across tools when each uses different credit formulas.

Monthly allocations that expire create pressure to “use it or lose it” rather than optimizing for actual needs.

Most importantly, when you don’t know how much things actually cost, you can’t make informed decisions about which models to use for which tasks.

Open-source tools that support BYOK (Bring Your Own Key) preserve the transparency developers need. You see exact token usage in real time, pay only for what you consume, and have no monthly balance that expires. You also keep the freedom to switch between models and providers based on task requirements and budget constraints, rather than being locked in to one vendor.

The shift to credits clearly benefits AI coding assistant companies: predictable monthly revenue, no rollover obligations, simplified billing. But it reduces developer control over both costs and tool selection.