This Week in Kilo Code: GPT-5.1, Provider Upgrades, and CLI Improvements

Extension versions 4.119.1 – 4.119.6 | CLI versions 0.4.1 – 0.4.2

Welcome back to the weekly product roundup! This week brings full support for OpenAI’s newly released GPT-5.1 model family, along with quality-of-life fixes for the CLI and several provider-level enhancements in the extension.

GPT-5.1 Now Available in Kilo Code

OpenAI has released four new models, and all of them are now fully supported across the Kilo Code ecosystem:

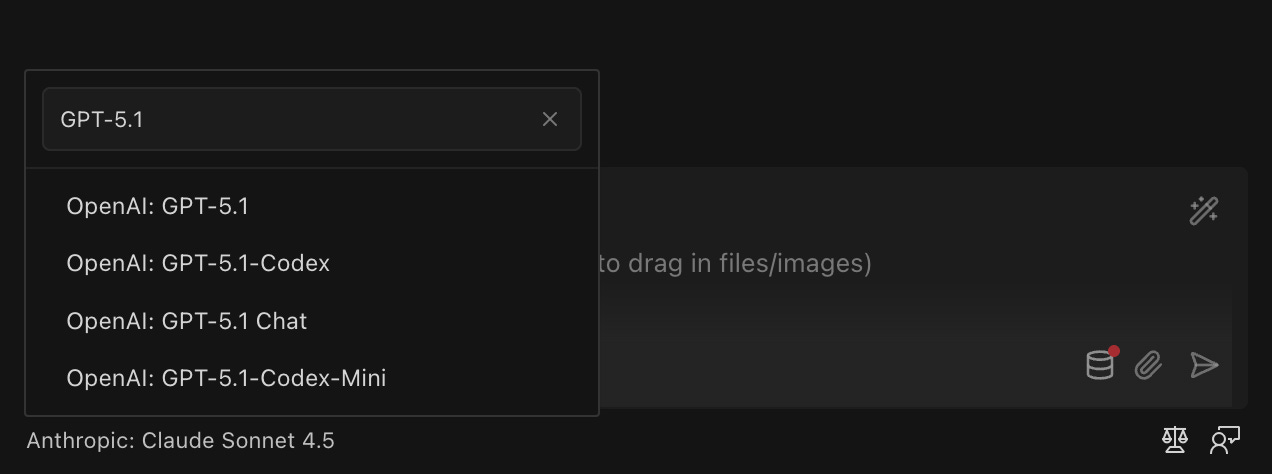

GPT-5.1 (400k context)

GPT-5.1 Chat (200k context)

GPT-5.1-Codex (400k context)

GPT-5.1-Codex-Mini (400k context)
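If you are deciding which of these models can hold a large prompt, the context sizes listed above can be turned into a small lookup. This is only an illustrative sketch: the figures are copied from the list, "k" is assumed to mean 1,000 tokens, and the helper name `models_that_fit` is hypothetical, not part of Kilo Code.

```python
# Context windows as listed above, in tokens (assuming "k" means 1,000).
CONTEXT_WINDOWS = {
    "GPT-5.1": 400_000,
    "GPT-5.1 Chat": 200_000,
    "GPT-5.1-Codex": 400_000,
    "GPT-5.1-Codex-Mini": 400_000,
}


def models_that_fit(prompt_tokens: int, reserve_for_output: int = 0) -> list[str]:
    """Return the listed models whose window covers the prompt plus reserved output.

    Hypothetical helper for illustration; it assumes the context window is
    shared between input and output tokens.
    """
    needed = prompt_tokens + reserve_for_output
    return [name for name, window in CONTEXT_WINDOWS.items() if window >= needed]


# A 250k-token prompt rules out the 200k-context Chat model.
print(models_that_fit(250_000))
```

Reserving some room for the response (the `reserve_for_output` argument) is a conservative choice; adjust it to your own workload.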

You can use them through:

The Kilo Code Gateway (where your first $10 top-up gets you a +$20 bonus), or

Your own OpenAI API key

Just search “GPT-5.1” in the model selector to see the full list.

What’s New in GPT-5.1

GPT-5.1 is optimized to:

Use fewer thinking tokens on straightforward tasks, meaning snappier responses & lower cost

Use more deliberate reasoning on complex tasks, meaning higher reliability

Improve significantly on math, coding, and competitive programming benchmarks

Additional improvements include:

“No reasoning” mode (reasoning_effort=none) enables GPT-5.1 intelligence at GPT-4.1 speeds

24-hour prompt caching for lower latency & lower cost on long-running sessions
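For readers using their own OpenAI API key, here is a rough sketch of what a request body with reasoning turned off might look like. It only builds and prints the JSON payload and sends nothing. The model id `gpt-5.1`, the Chat Completions message shape, and the `prompt_cache_retention` field are assumptions to check against OpenAI's API reference; `build_request` is a hypothetical helper, and inside Kilo Code these options are handled for you.

```python
import json


def build_request(prompt: str, reasoning_effort: str = "none",
                  extended_cache: bool = False) -> dict:
    """Build a Chat Completions style payload (hypothetical helper, nothing is sent)."""
    payload = {
        "model": "gpt-5.1",  # assumed model id; confirm in your provider's model list
        "messages": [{"role": "user", "content": prompt}],
        # "none" asks the model to skip reasoning tokens for faster replies.
        "reasoning_effort": reasoning_effort,
    }
    if extended_cache:
        # Assumed field name for the 24-hour prompt cache; verify before relying on it.
        payload["prompt_cache_retention"] = "24h"
    return payload


print(json.dumps(build_request("Rename foo to bar in utils.py"), indent=2))
```

Keeping the effort level as a parameter makes it easy to raise it again for harder tasks without changing the rest of the request.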

GPT-5.1-Codex & 5.1-Codex-Mini

GPT-5.1-Codex: Incremental improvement to GPT-5-Codex

GPT-5.1-Codex-Mini: ~4× more usage allowed, with a small capability tradeoff

Suggested combo: GPT-5.1 for planning, Codex for execution
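The suggested combo can be pictured as a simple routing rule: planning steps go to GPT-5.1, execution steps go to Codex. The sketch below is purely illustrative. The `Phase` enum, the `pick_model` helper, and the lowercase model ids are assumptions, and in practice you would make this choice through the model selector rather than in code.

```python
from enum import Enum


class Phase(Enum):
    PLAN = "plan"
    EXECUTE = "execute"


# Assumed model ids following the names in this post; check your provider's list.
MODEL_FOR_PHASE = {
    Phase.PLAN: "gpt-5.1",
    Phase.EXECUTE: "gpt-5.1-codex",
}


def pick_model(phase: Phase) -> str:
    """Return the model suggested for a given phase (hypothetical helper)."""
    return MODEL_FOR_PHASE[phase]


steps = [
    (Phase.PLAN, "Outline the migration"),
    (Phase.EXECUTE, "Apply step 1 of the plan"),
]
for phase, task in steps:
    print(f"{pick_model(phase)}: {task}")
```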

Extension

Improved Search, Execution, and Tool Calling Stability

This week includes several meaningful improvements to reliability and responsiveness:

Fewer edge-case hangs during long-running operations

More predictable behavior when generating and applying diffs

More consistent search and tool-calling results

Better schema and state handling across large repositories

Provider & Model Enhancements

GPT-5.1 models added to the OpenAI provider

LiteLLM now supports native tool calling and loads more reliably

Fireworks now includes Kimi K2-Thinking

Doubao adds the doubao-seed-code model

MiniMax M2’s latest version is supported, with interleaved thinking and native tool calling enabled by default

Synthetic provider now defaults to JSON tool calls

Z.ai improvements for JSON-style tool calling

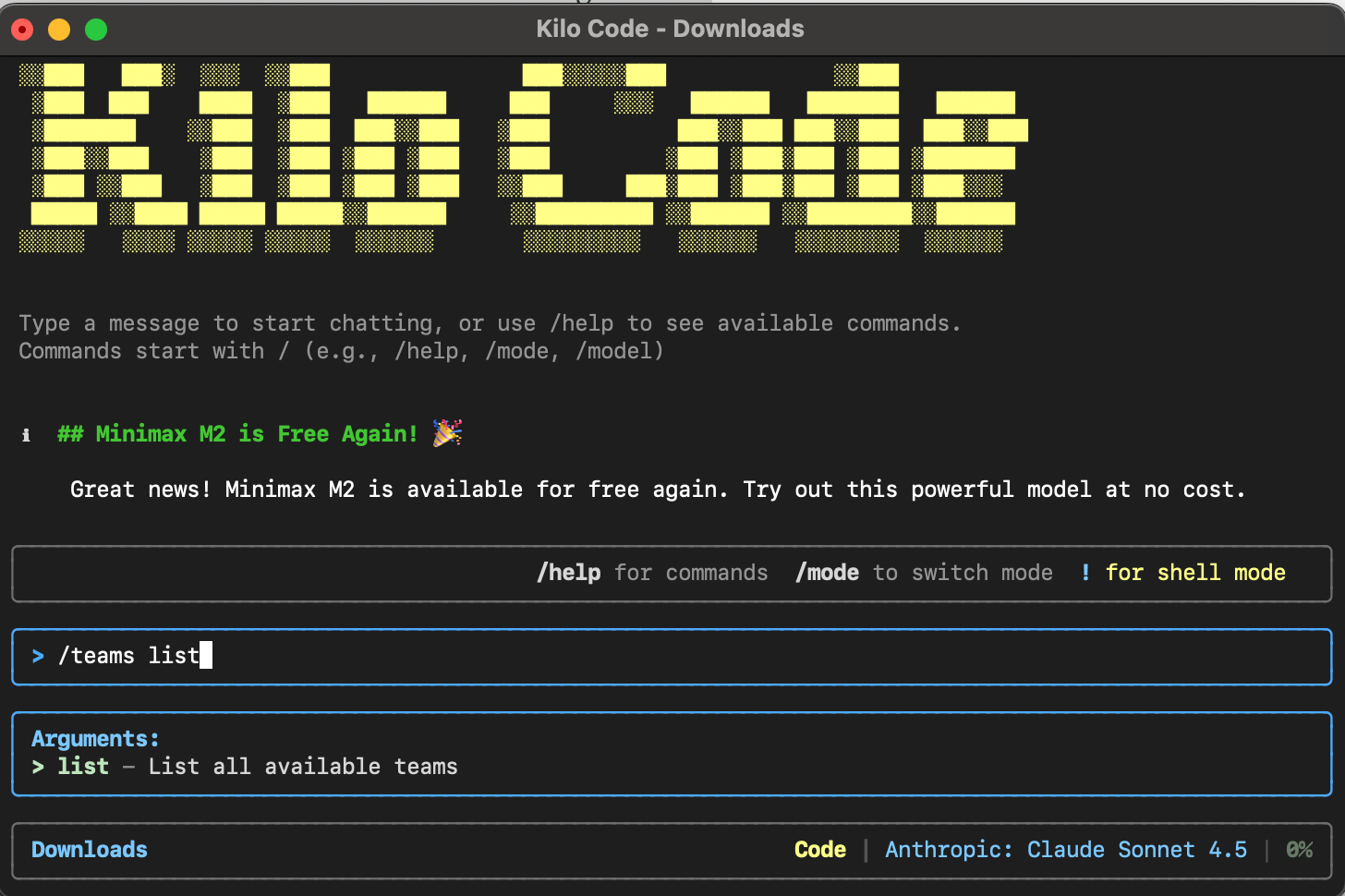

CLI

Fixes & Improvements

The latest released versions focus on important reliability fixes:

Added /teams list subcommand to switch between personal workspaces and Organizations

Improved OpenAI-compatible provider configuration

Improved Auth Wizard

Install the latest CLI to use Kilo right from the command line:

npm install -g @kilocode/cli

View All Latest Releases

CLI: 0.4.1 • 0.4.2 • 0.5.0 • 0.5.1

Extension: 4.119.1 • 4.119.2 • 4.119.3 • 4.119.4 • 4.119.5 • 4.119.6