On August 28th Anthropic will implement new Claude Code weekly limits aimed at mitigating abuse spikes caused by power users “running Claude Code 24/7” and “reselling accounts”. Weekly rate limits alongside existing 5-hour limits raised concerns in the builder community and are expected to be welcomed with a backlash based on the lukewarm reception in the rate limit megathread on Reddit.

Anthropic justified the new weekly rate limits by pointing to users who were running Claude continuously around the clock, and discovering some users consumed tens of thousands of dollars in compute while paying just $200 monthly subscriptions. They also cited policy violations such as account sharing and reselling access as contributing factors that were impacting system resources. According to Anthropic, these new restrictions were necessary to maintain an equitable experience and preserve system capacity for all users, rather than allowing a small percentage of heavy users to consume disproportionate computational resources. They have a point regarding power users getting more and more creative with their usage. Recently Claude Code in the vibe coding community evolved into a new developer status symbol: usage-based leaderboards.

The leaderboard phenomenon

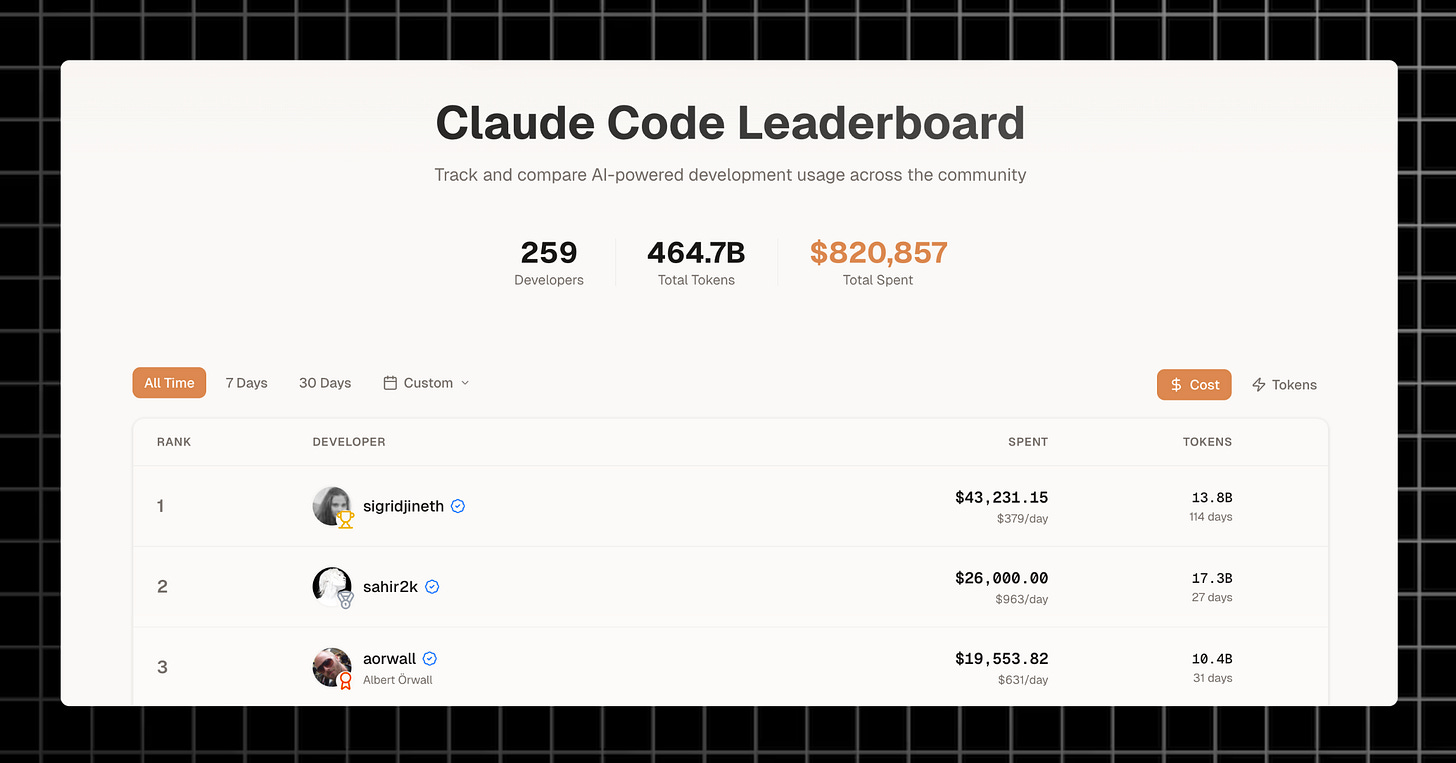

The programming community has always found ways to signal status and productivity, but AI coding tools created an entirely new flex: token spending. Developer leaderboards like Viberank.app now showcase monthly AI bills, with the top spender hitting a $963 daily average!

This represents the latest evolution in programmer status signaling, moving from GitHub's green commit squares to raw inference costs. The gamification makes sense - developers have consistently embraced competitive platforms to showcase what they are building and token usage provides a quantifiable metric for the new AI-assisted productivity frontier.

The success of the ccusage CLI tool with 7k stars on GitHub, with the first commit in May 2025 shows a compelling story of spend tracking. Claude Code power users were for the first time able to track and visualize code token consumption directly from the terminal with a simple command:

bunx ccusageThen leaderboards such as Viberank emerged, transforming what was once private spending into public competition. But this spending arms race collides with economic reality - the same power users driving these leaderboards are hitting the rate limits that make $200/mo plans unsustainable…

Rate Limits vs Leaderboard Champions

The vibe coding leaderboards exposed a fundamental tension in AI pricing: platforms needed to throttle their heaviest users precisely when those users wanted to flex their spending power. Anthropic rate limits even its highest Tier 4 customers by a $5,000 monthly cap (non-Enterprise). With top users on Viberank averaging $350 to $900 daily bills, that means only 5 to 14 days of model usage.

This created the perfect storm for leaderboard gaming. Viberank implemented strict validation rules to prevent fabricated submissions: maximum daily costs of $5000, 250 million token limits and cost-per-token ratios between 0.000001 and 0.1. These limits weren't arbitrary. They reflected the reality that even legitimate power users couldn't sustainably exceed these numbers without hitting platform throttling.

The validation rules inadvertently documented the constraints of current AI infrastructure. When your leaderboard caps daily spending at $5,000, you're acknowledging that consistent higher usage simply isn't accessible, regardless of willingness to pay. The open source tools gained traction precisely because they promised to eliminate these artificial spending ceilings, letting users burn through their own API keys without platform-imposed throttling. With so much concern about abuse and power users using the models for toy projects, there is a strong case to be made about some outstanding contributions of power users:

What are the stories behind the power users Anthropic is rate limiting?

Power users build serious infrastructure

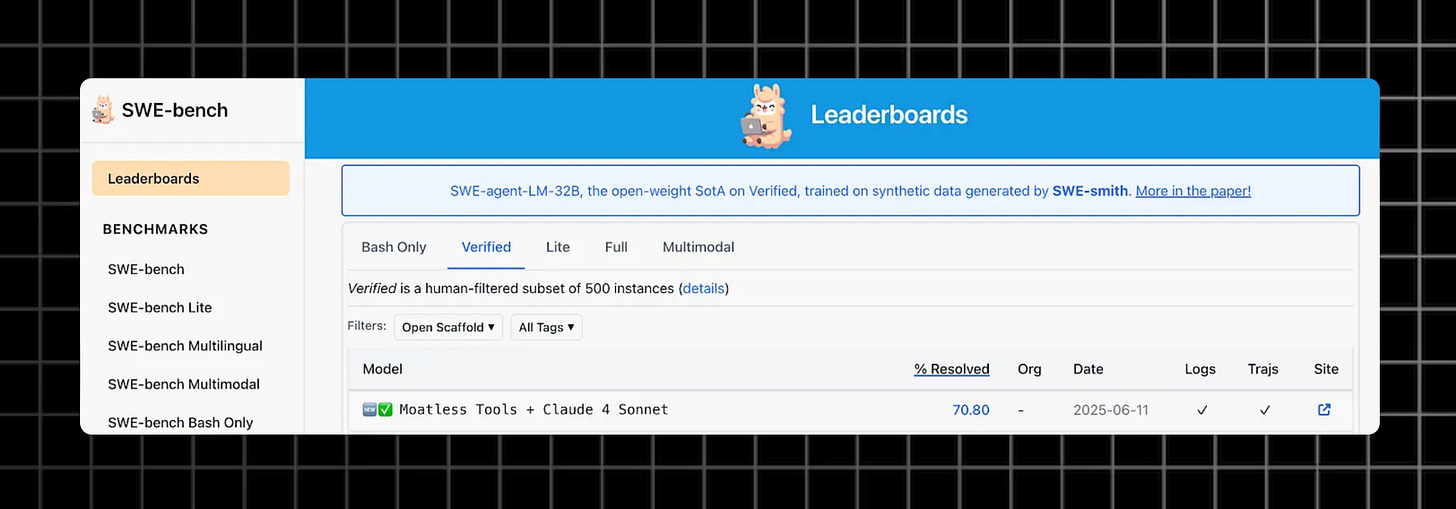

The developers burning through thousands of dollars monthly aren't just experimenting - they're building production-grade AI coding tools that push the boundaries of what's possible. The current #3 all-time spender on Viberank exemplifies this trend: Albert Örwall, creator of Moatless Tools, a sophisticated framework for running evaluations of coding benchmarks that achieved a 70.8% solve rate on the SWE-Bench benchmark at just $0.63 per instance using Claude Sonnet 4.

Örwall built specialized tools for context insertion and response handling across large codebases. The project includes Docker containerization, Kubernetes runner support, multiple evaluation flows, and a comprehensive benchmarking system. This isn't hobbyist tinkering, it's infrastructure-level work that resulted in an ArchiveX paper:

I was skeptical if the models can be used in production. In a way I still am. MoatlessTools was an experiment in how far can I get on the SWE-bench leaderboard –Albert Örwall

Power users build evaluation metrics

Sigrid Jin, software engineer at Sionic AI, used Claude model to build Python Implementation of a Google Deep mind paper : MUVERA: Multi-Vector Retrieval via Fixed Dimensional Encodings with 292 stars on GitHub.

“I'm an engineer, but I do like doing my research. I think I waste most of my tokens on vibe modeling. My main goal was to validate whether a machine learning paper is interesting enough and to do so I need code implementation and a good benchmark” –Jin

Each coding session would cost him 500 USD and before he hit the rate limits he would export the metadata of the current session to a markdown file and upload it to GPT model to process it into a shorter markdown file that will fit within the context window of the next session. He used 8 shared accounts with friends to relay previous history into the new session and ship the project, which placed him as number 1 token spender on Viberank.

The Bottom Line

Anthropic's rate limits reveal a fundamental tension in AI democratization. While protecting infrastructure from abuse, they're also constraining the very power users driving innovation in AI-assisted development. The leaderboard culture isn't just showing off - it's documenting the gap between what developers need and what current pricing models can sustainably provide. As the AI coding space matures, platforms will need to find ways to support serious builders without subsidizing unsustainable usage patterns.

I guess everywhere you go there’s going to be wasteful people making excuses for how they use something. That’s really powerful and making it really hard on other people and not giving a damn about it. There are no meaningful outcomes that actually make any sense when I read about these people trying to make leader boards for using AI because they have way too much money to blow. Where she at they’re probably using grants to do a lot of this stuff that could be used for real research that makes people’s lives that much easier at some point, and while I know there are people doing that these leaderboard folks to me are just flexing through addiction.

KiloCode Promo for Power User? 👀