Kilo Code for JetBrains: Building a link shortener API in WebStorm

Kilo Code for JetBrains is live on Product Hunt! If you love what we stand for (open source, transparency, and happy users like you), support us. It takes less than a minute.

After 420,000+ downloads on VS Code and Cursor, Kilo Code is now available in WebStorm, IntelliJ IDEA, PyCharm, and all other JetBrains IDEs.

This means that you get the same multi-model AI coding capabilities, now directly in your JetBrains environment.

We tested Kilo Code for JetBrains by building a link shortener API in WebStorm.

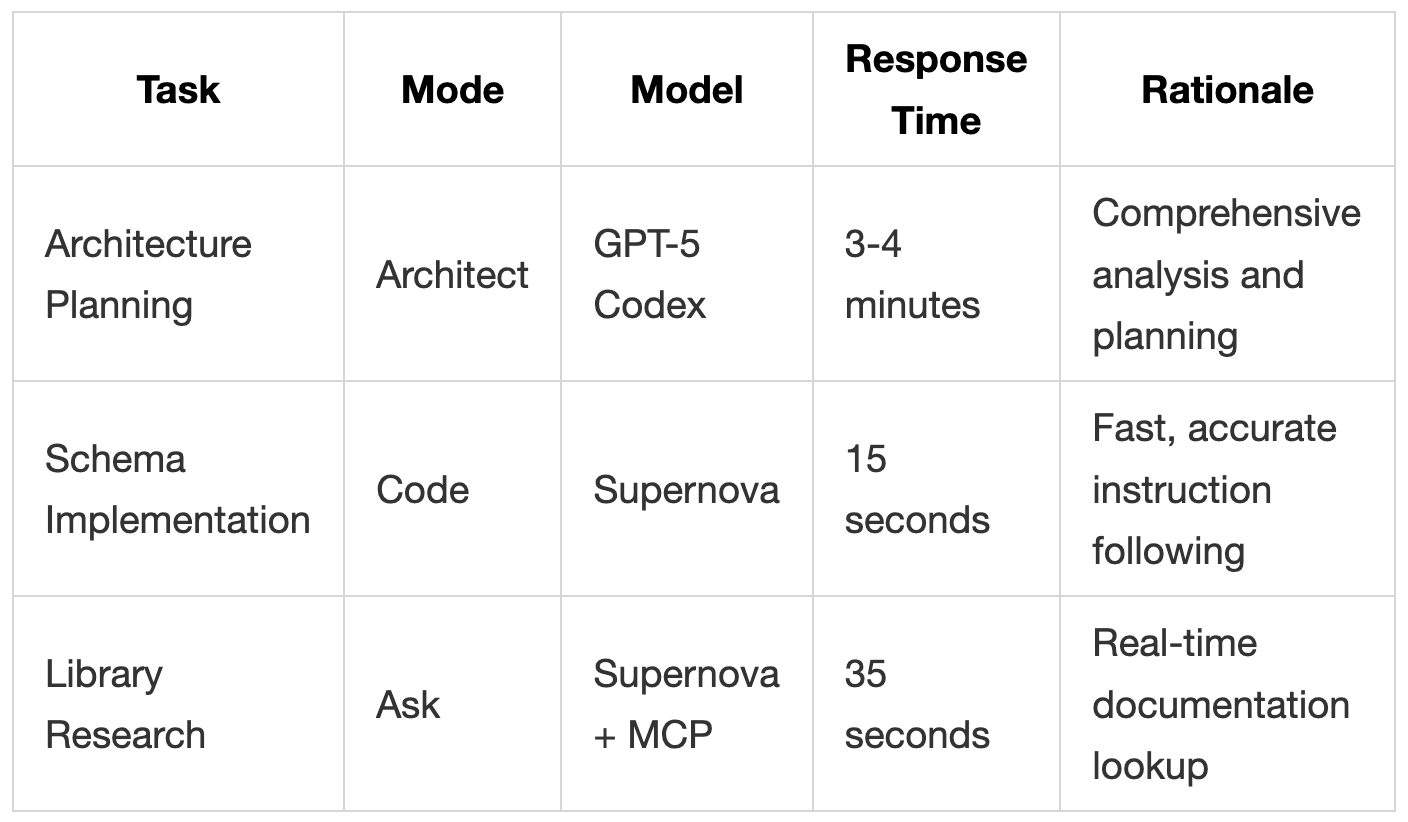

Along the way we used mode switching (Architect → Code → Ask), model selection (GPT-5 Codex for planning, Code Supernova for execution), prompt enhancement (to cover edge cases), and an MCP server integration (to pull live docs).

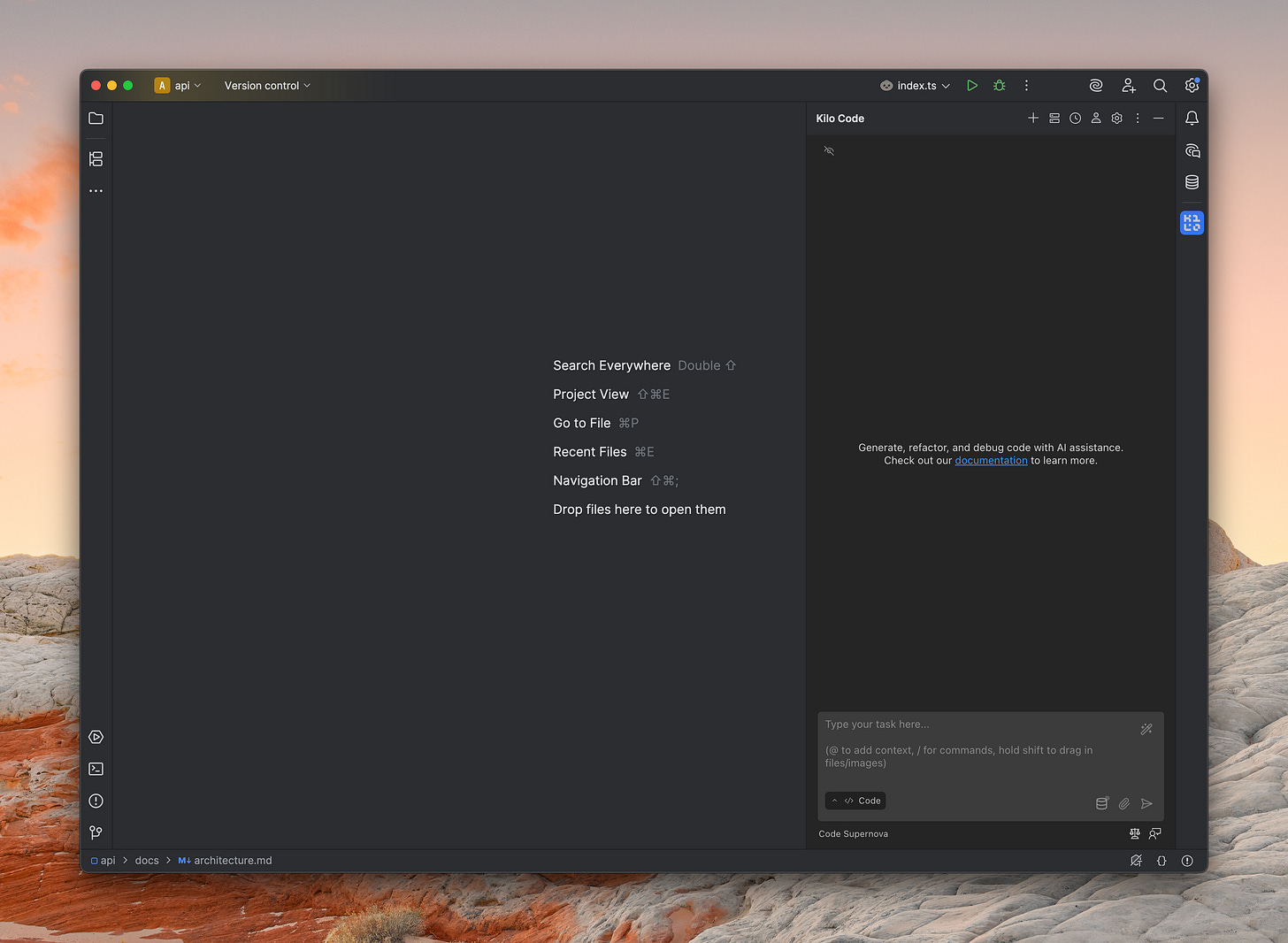

Getting Started Takes 30 Seconds

We’ll use WebStorm (a JavaScript/TypeScript IDE by JetBrains) for this demo.

There are 2 ways to install our plugin:

Open JetBrains Marketplace and search for “Kilo Code”

Open WebStorm and then go to Settings → Plugins → Marketplace (see the video above)

Before using Kilo Code, you need to choose a model. There are 2 setup options here:

Use Kilo Code as a Provider: Use our gateway and get access to 450+ AI models

Use providers directly: Bring your own keys (BYOK) from OpenRouter, Anthropic, OpenAI, Cerebras, etc.

Our plugin integrates with existing JetBrains features and keybindings. Let’s explore more.

Architecture Planning with GPT-5 Codex

We started with GPT-5 Codex in architect mode.

We specifically selected GPT-5 Codex for its superior reasoning and planning capabilities. Unlike execution-focused models like Supernova, GPT-5 Codex excels at thinking through system design, edge cases, and architectural decisions.

Before sending the prompt, we clicked the enhance button (located at the top right of the input area). This feature is easy to miss, but it’s powerful.

“Enhance prompt” transformed our basic prompt into a comprehensive spec, automatically adding click tracking, custom slug handling, database indexing, input validation, migration guidance, and testing examples. These were details we hadn’t even considered.

The generated architecture document included:

Database schema recommendations

API endpoint definitions

Slug generation strategies

Package dependencies

Deployment considerations

We hadn’t prompted for any of these; GPT-5 Codex understood the domain well enough to surface them proactively.
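The post does not show the slug generation or input validation code, so the following is only a minimal TypeScript sketch of what those two items could look like. The function names, the alphabet, the default length of 7, and the 3 to 32 character limit are all assumptions made for illustration, not values from the generated architecture document.

```typescript
// Hypothetical helpers: names, alphabet, and limits are assumptions,
// not taken from the generated architecture document.
const SLUG_ALPHABET =
  "abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789";

// Generate a random slug. `random` is injectable so the function can be
// tested deterministically; Math.random is not cryptographically secure.
function generateSlug(
  length: number = 7,
  random: () => number = Math.random
): string {
  let slug = "";
  for (let i = 0; i < length; i++) {
    const index = Math.floor(random() * SLUG_ALPHABET.length);
    slug += SLUG_ALPHABET.charAt(index);
  }
  return slug;
}

// Validate a user-supplied custom slug: 3 to 32 characters drawn from
// letters, digits, hyphen, and underscore.
function isValidCustomSlug(slug: string): boolean {
  return /^[A-Za-z0-9_-]{3,32}$/.test(slug);
}

// Accept only absolute http(s) URLs as shortening targets.
function isValidTargetUrl(input: string): boolean {
  try {
    const url = new URL(input);
    return url.protocol === "http:" || url.protocol === "https:";
  } catch {
    return false;
  }
}
```

Random slugs can collide, so a real service would still need a uniqueness check in the database before saving one.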

Implementation with Supernova: Speed and Precision

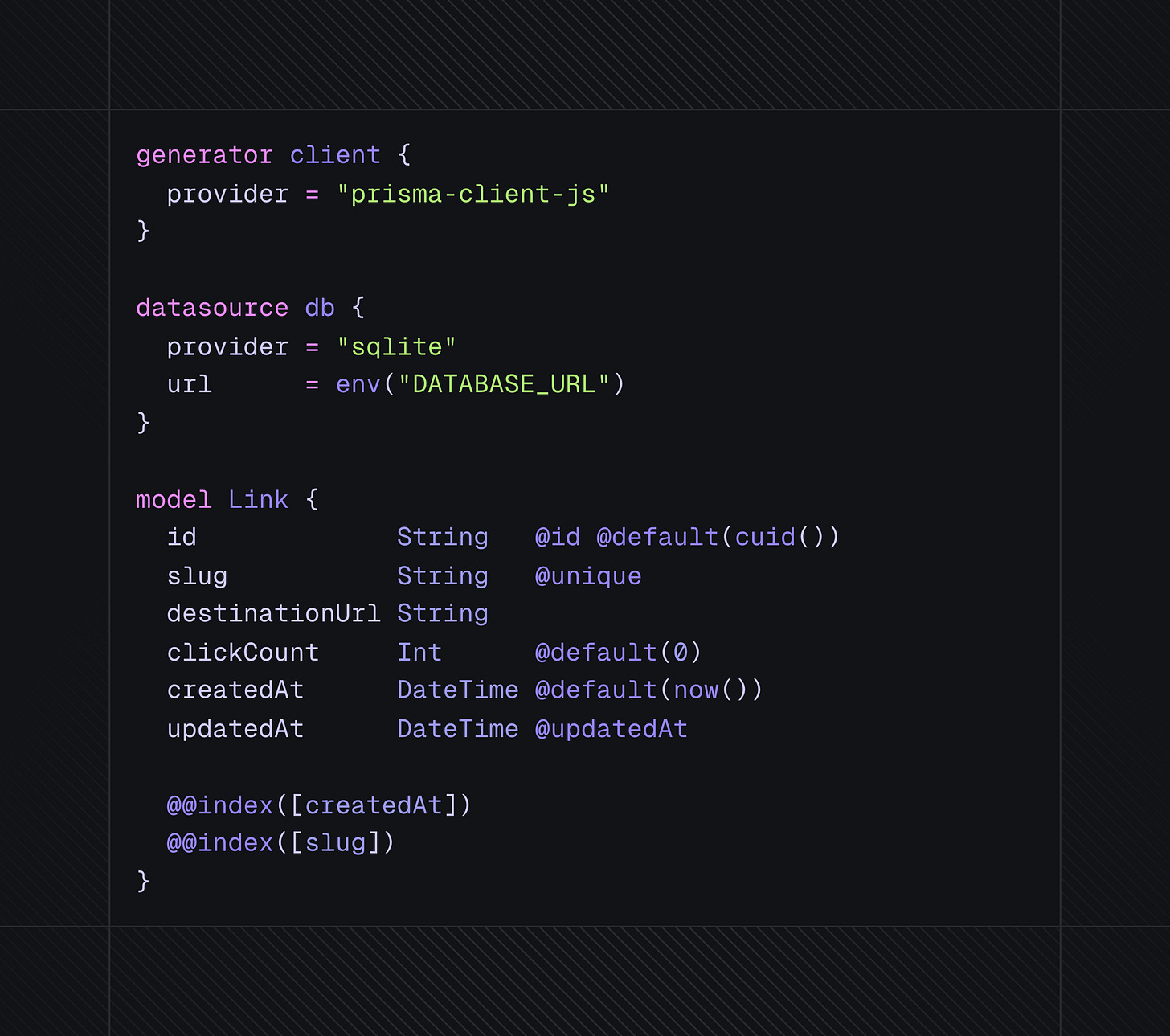

With our architecture document complete, we switched to code mode and selected Code Supernova.

Code Supernova generates code faster than any other model we’ve tested—often 10x faster than GPT-5. But more importantly, it follows instructions precisely without adding unnecessary creativity.

To give Supernova the context it needed, we used the @ symbol to attach two files:

@schema.prisma: The empty schema file where code should be generated

@docs/architecture.md: The complete architecture document from the previous step

This context-passing between models is crucial. We’re using GPT-5 Codex’s reasoning as Supernova’s blueprint.

Our prompt was deliberately simple: “Please setup our ‘@/prisma/schema.prisma’ schema based on the ‘@/docs/architecture.md’.” No need to re-explain the requirements—everything was in the architecture document.

Supernova processed the 400-line architecture document and started generating code immediately. We could see the diff appearing in real-time in our editor—fields being added, relationships being defined, indexes being created on frequently-queried fields. In 15 seconds, it was done.

The generated schema matched our architecture. Every field had the correct type, every relationship was properly defined, and the indexes on frequently queried fields that GPT-5 Codex had recommended during planning were all there.

There were no hallucinations, no creative interpretations, just clean implementation of the spec. Impressive.
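The generated schema itself is not reproduced in this post. As a rough illustration of the ideas it covers (fields, a relationship between links and clicks, and indexed lookups), here is an in-memory TypeScript sketch. Every name in it (`Link`, `ClickEvent`, `InMemoryLinkStore`, and their fields) is hypothetical and is not the Prisma schema Supernova produced.

```typescript
// Hypothetical record shapes: field names are assumptions, not the generated schema.
interface Link {
  id: number;
  slug: string;
  targetUrl: string;
  createdAt: Date;
}

interface ClickEvent {
  linkId: number; // relationship: each click belongs to exactly one link
  clickedAt: Date;
  ip: string | null;
}

class InMemoryLinkStore {
  private nextId = 1;
  // Keyed by slug: plays the role of a unique index on the slug field.
  private linksBySlug = new Map<string, Link>();
  // Keyed by link id: plays the role of an index on the click's foreign key.
  private clicksByLinkId = new Map<number, ClickEvent[]>();

  createLink(slug: string, targetUrl: string): Link {
    if (this.linksBySlug.has(slug)) {
      throw new Error(`Slug already in use: ${slug}`);
    }
    const link: Link = {
      id: this.nextId++,
      slug,
      targetUrl,
      createdAt: new Date(),
    };
    this.linksBySlug.set(slug, link);
    this.clicksByLinkId.set(link.id, []);
    return link;
  }

  // Resolve a slug to its target URL and record the click.
  // Returns null for unknown slugs.
  resolve(slug: string, ip: string | null = null): string | null {
    const link = this.linksBySlug.get(slug);
    if (!link) return null;
    const clicks = this.clicksByLinkId.get(link.id) ?? [];
    clicks.push({ linkId: link.id, clickedAt: new Date(), ip });
    this.clicksByLinkId.set(link.id, clicks);
    return link.targetUrl;
  }

  clickCount(slug: string): number {
    const link = this.linksBySlug.get(slug);
    if (!link) return 0;
    return this.clicksByLinkId.get(link.id)?.length ?? 0;
  }
}
```

The two maps show why the indexes matter: redirects look up by slug on every request, and click statistics look up by link, so both access paths should avoid a full scan.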

The Documentation Problem: Enter MCP Servers

Kilo Code supports MCP (Model Context Protocol) servers, giving AI models access to external tools and real-time data. Available MCP servers include:

Context7: Package and library documentation

GitHub/GitLab: Repository interactions

File System: Advanced file operations

GitLab: Issue and merge request management

Puppeteer: Web automation

Brave Search: Web search capabilities

SQLite: Database operations and business intelligence

Users can install these from Kilo Code’s settings or create custom MCP servers for specific workflows.

For the link shortener, Context7 solved a real gap. Models have training cutoffs (Supernova’s is September 2024), and they sometimes hallucinate APIs or blend syntax across similar libraries.

Context7 pulls the latest docs at request time, so the model cites what actually exists instead of guessing—no imaginary endpoints, no mixed runtimes.

We used:

Mode: Ask

Model: Supernova

MCP Server: Context7

Time: 35 seconds

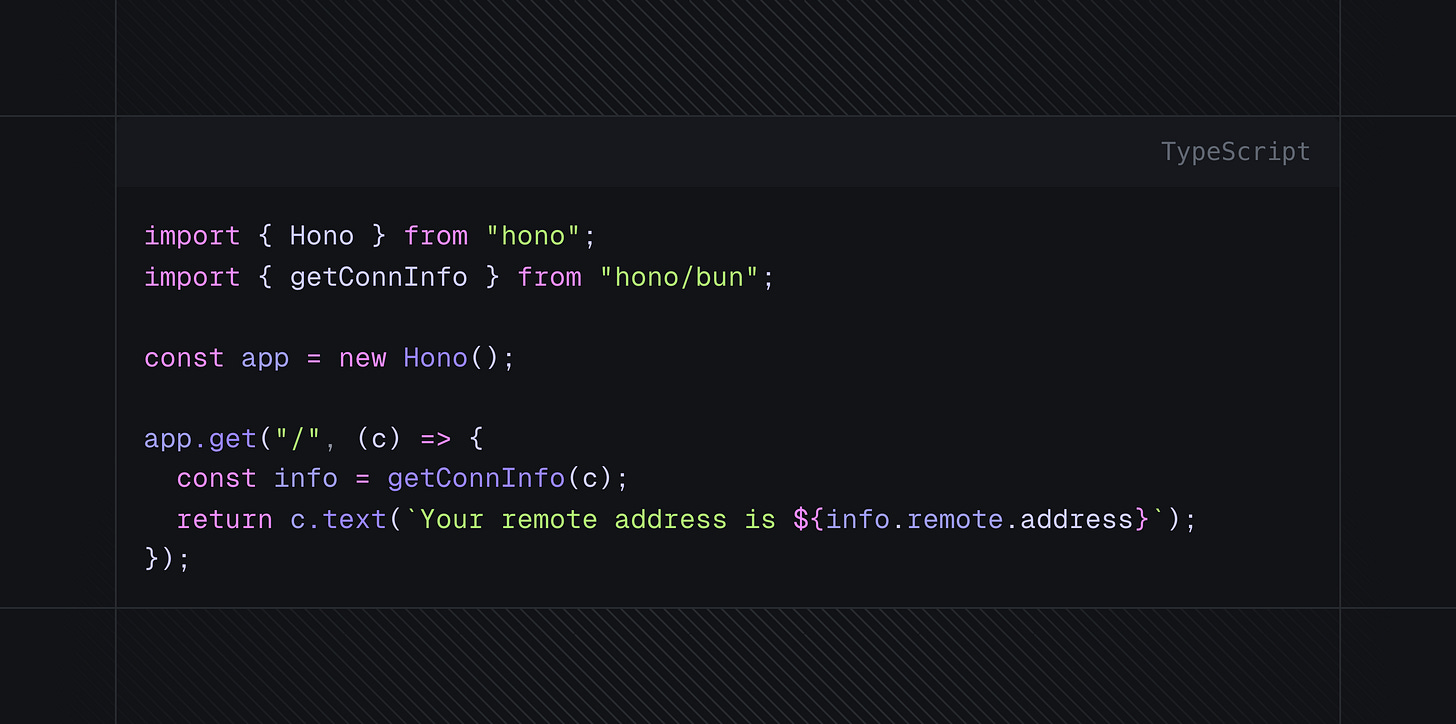

When we first asked how to get the client IP address in HonoJS, Supernova mixed up the syntax between Node.js and Bun runtimes.

After enabling Context7, we asked again. This time, Supernova searched the latest HonoJS documentation and returned the current API, instead of leaning on its September 2024 training snapshot and suggesting older syntax.
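The post does not include the API that Supernova returned, so the snippet below does not try to reproduce Hono's own helper. It is a runtime-agnostic sketch of one common fallback: reading the client IP from proxy headers on a standard Fetch `Headers` object. The function name `clientIpFromHeaders` is hypothetical, and the approach assumes the service runs behind a trusted proxy that sets these headers.

```typescript
// Hypothetical helper, not part of Hono: read the client IP from proxy headers.
// Only trust these headers when a proxy you control sets them, because
// clients can send arbitrary X-Forwarded-For values.
function clientIpFromHeaders(headers: Headers): string | null {
  const forwardedFor = headers.get("x-forwarded-for");
  if (forwardedFor) {
    // The header may hold a comma-separated chain; the first entry is the
    // address the first proxy saw as the client.
    const first = forwardedFor.split(",")[0]?.trim();
    if (first) return first;
  }
  const realIp = headers.get("x-real-ip")?.trim();
  return realIp ? realIp : null;
}
```

Because it only touches `Headers`, the same function behaves identically on Node.js and Bun, which sidesteps the runtime mix-up described above. For the socket-level address, check the current HonoJS documentation for the helper that matches your runtime.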

The Right Model for the Right Mode

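Our workflow showed how picking the right model for each task makes a difference:

Architect mode: GPT-5 Codex, for planning the architecture

Code mode: Code Supernova, for fast, precise implementation of the plan

Ask mode: Supernova with the Context7 MCP server, for answers grounded in current documentation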

What JetBrains Support Looks Like

The native JetBrains experience means:

Kilo Code appears in a standard sidebar panel

Your usual keyboard shortcuts work

Files can be added as context directly from the project tree

Code is generated into your editor in real time, so you can watch the changes as they happen and review the diff within seconds.

You get AI assistance right inside the IDE without browser tabs or copy-paste “workflows”.

Built for WebStorm, IntelliJ, PyCharm, and the entire JetBrains family

Kilo Code for JetBrains supports:

WebStorm

IntelliJ IDEA

PyCharm

PhpStorm

RubyMine

GoLand

…and all other JetBrains IDEs

The plugin is available now in the JetBrains Marketplace. It’s also free and open source. You can install it directly from the marketplace or from your IDE.

We’re also available in the VS Code Marketplace.