How /newtask can substantially reduce your API credit consumption

It all comes down to taming context window length.

Before getting started: if you’re already familiar with the concept of a “context window”, skip ahead to “2. The problem”. If not, start from the beginning.

1. Background

LLMs have an inherent problem: They are forgetful & stateless.

Imagine 2 people talking to each other. Person A says to person B: “Where are my keys?”, to which person B replies: “In the kitchen”.

Naturally, person A would reply: “Great, thank you!”, remembering that “in the kitchen” was an answer to the “Where are my keys?” question.

If person A were an LLM, things would work differently. You’d have to feed the previous context of the conversation back to person A for them to understand the “in the kitchen” answer. So you’d basically have to say to person A: “Person B replied ‘in the kitchen’ to your previous question, ‘Where are my keys?’”
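Here is the same idea in code. In a typical chat API, the client (not the model) keeps the transcript and resends all of it with every request. This is a minimal Python sketch assuming a generic list-of-messages format; `build_request` is a hypothetical helper, not any particular provider’s API, and nothing is actually sent over the network.

```python
from typing import Dict, List

Message = Dict[str, str]


def build_request(history: List[Message], new_user_text: str) -> List[Message]:
    """Return the full payload for the next call: every earlier turn plus the new one.

    The model keeps no memory between calls, so the whole history has to be resent.
    """
    return history + [{"role": "user", "content": new_user_text}]


history: List[Message] = []

# Turn 1: the question is sent on its own.
payload = build_request(history, "Where are my keys?")
history = payload + [{"role": "assistant", "content": "In the kitchen"}]

# Turn 2: for "Great, thank you!" to make sense, turn 1 must be sent again.
payload = build_request(history, "Great, thank you!")
for msg in payload:
    print(f"{msg['role']}: {msg['content']}")
```

The second payload carries all three messages. In a long coding session, this resent history is what fills up the context window.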

Welcome to the world of context windows

Think of context windows like an LLM’s working memory. Context windows allow you to continue talking to an AI model, without the model forgetting what you said 3 minutes ago.

Different AI models have different amounts of “working memory”, i.e. different context window sizes.

These are the 3 most popular models on Kilo Code and the sizes of their context windows:

Claude 3.7 Sonnet has a context window of 200,000 tokens

Gemini 2.5 Pro has a context window of 1 million tokens

GPT-4.1 also has a context window of 1 million tokens

If you’re not familiar with what a token is, this article from OpenAI provides a good explanation. Think of 1 token as approximately 4 characters in English.
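To make the rule of thumb concrete, here is a tiny Python estimator. It is only the ~4 characters per token heuristic mentioned above; real tokenizers differ by model, so treat `estimate_tokens` (a hypothetical helper) as a rough guide rather than an exact count.

```python
def estimate_tokens(text: str) -> int:
    """Rough token estimate using the ~4 characters per token rule of thumb for English.

    Real tokenizers differ by model; use the provider's tokenizer for exact counts.
    """
    # Round up so that any non-empty text counts as at least one token.
    return (len(text) + 3) // 4


print(estimate_tokens("Where are my keys?"))  # 18 characters -> 5 tokens
print(estimate_tokens("x" * 800_000))         # ~800k characters fill a 200k window
```

By this estimate, a 200,000-token context window holds roughly 800,000 characters of English text.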

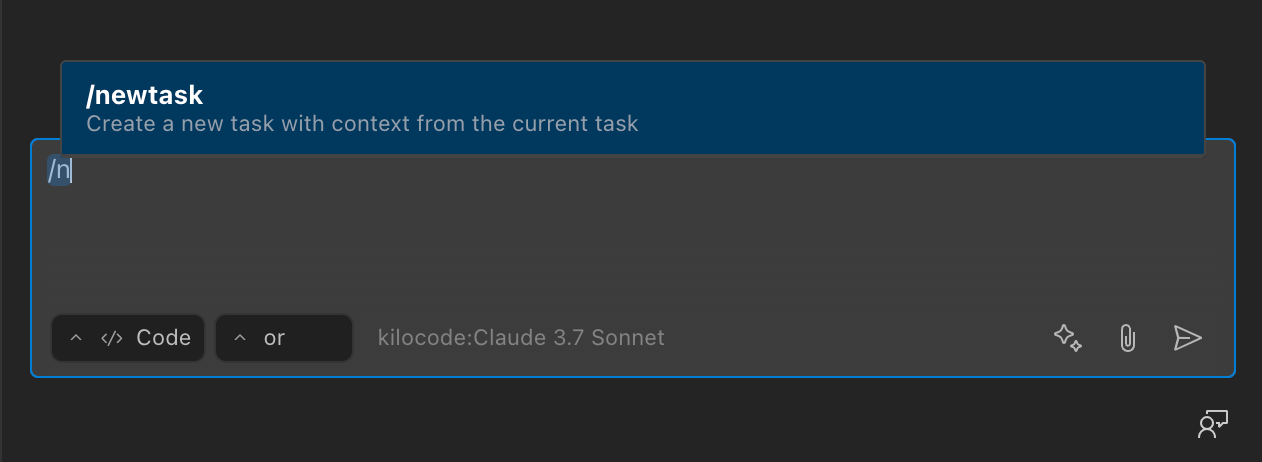

Cline, Roo and Kilo Code let you actively monitor how much of your context window you’re using.

In the image above, we’re using Claude 3.7 Sonnet, which (as mentioned before) has a 200k-token context window. So far, we’ve used 9.7k of those 200k tokens.

Why does this matter at all? Two big reasons:

Stability

Cost

2. The problem

There are 2 big reasons why we should care about context window size: stability and cost.

Stability: LLMs become unstable if you overload their context windows

Cline has repeatedly said that an LLM’s performance can dip once you exceed 50% of its context window (e.g. using more than 100k tokens of Claude 3.7 Sonnet’s 200k context window).

What that means: Imagine talking to a person who’s holding a lot of information in their head. As you keep asking questions, that person might:

Forget earlier parts of the conversation

Need you to re-explain the same information over and over again

The same thing happens with LLMs.
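The usage figure from the screenshot and the 50% guideline boil down to simple arithmetic. A minimal Python sketch, assuming the round window sizes quoted in this article (the model keys are hypothetical labels, and exact limits should be checked in each provider’s documentation):

```python
# Context window sizes as quoted in this article (round numbers; check your
# provider's documentation for exact limits). Keys are illustrative labels.
CONTEXT_WINDOWS = {
    "claude-3.7-sonnet": 200_000,
    "gemini-2.5-pro": 1_000_000,
    "gpt-4.1": 1_000_000,
}


def context_usage(used_tokens: int, model: str) -> float:
    """Fraction of the model's context window currently in use."""
    return used_tokens / CONTEXT_WINDOWS[model]


def past_half(used_tokens: int, model: str) -> bool:
    """True once usage goes beyond the 50% mark Cline cautions about."""
    return context_usage(used_tokens, model) > 0.5


print(f"{context_usage(9_700, 'claude-3.7-sonnet'):.2%}")  # the screenshot: 4.85%
print(past_half(100_000, "claude-3.7-sonnet"))  # exactly 50%: False
print(past_half(120_000, "claude-3.7-sonnet"))  # 60%: True
```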

There’s also another problem with overloading an LLM’s context window: cost.

Cost: The bigger your context window is, the more $ you pay

At their very core, Cline/Roo/Kilo Code are useful wrappers that you use to interact with LLMs.

You pay LLM providers based on the:

Data you send to them (input tokens).

Data you consume from them (output tokens).

Here’s an important fact: When using AI coding agents (like Kilo Code), you’ll probably spend 90% of your money on input tokens.

The reason: you’ll most likely be working on a non-trivial project with lots of files. The LLM needs to know about those files to generate useful output. It also needs to know what it did previously, what worked and what didn’t, and a bunch of other stuff.

All of this data gets added to the context window and is sent to the LLM, which means you’ll spend a lot of input tokens.
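A quick simulation shows why input tokens pile up so fast: every turn resends the whole conversation so far, so total input grows roughly quadratically with the number of turns while output grows linearly. This Python sketch uses hypothetical per-turn numbers (4k new input tokens and 1k output tokens per turn), not measurements from Kilo Code.

```python
def token_totals(turns: int, new_input_per_turn: int, output_per_turn: int) -> tuple[int, int]:
    """Total input and output tokens when the whole context is resent every turn.

    Hypothetical model: each turn adds `new_input_per_turn` tokens of prompt/file
    content, the reply is `output_per_turn` tokens, and both stay in the context.
    """
    total_input = 0
    total_output = 0
    context = 0  # tokens already in the conversation before this turn
    for _ in range(turns):
        context += new_input_per_turn
        total_input += context  # the entire context is sent as input
        total_output += output_per_turn
        context += output_per_turn  # the reply is carried into the next turn
    return total_input, total_output


inp, out = token_totals(turns=10, new_input_per_turn=4_000, output_per_turn=1_000)
print(inp, out, f"{inp / (inp + out):.1%}")  # 265000 10000 96.4%
```

Output tokens usually cost more per token than input tokens, so the dollar split is less lopsided than the token split, but the resent context is what keeps growing as a session goes on.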

To make things worse, some LLM providers charge more per token once your context gets big enough. For example, Google charges $1.25 per 1 million input tokens for Gemini 2.5 Pro if your context size is at or below 200k tokens. That price goes up to $2.50 (double!) if your context size goes above 200k tokens.

In other words, if you send more than 200,000 tokens in a single request, you get charged more.
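Here is that tiered pricing as a small Python function. It is a sketch under one stated assumption: the tier is picked by the size of the whole prompt, and the chosen rate then applies to every input token in that request. The rates are the ones quoted above; check Google’s current pricing before relying on them.

```python
def gemini_input_cost(prompt_tokens: int) -> float:
    """Input cost in USD for one request under the tiered rates quoted above.

    Assumption: the tier is chosen by the size of the whole prompt, and the chosen
    rate applies to every input token in that request.
    """
    rate_per_million = 1.25 if prompt_tokens <= 200_000 else 2.50
    return prompt_tokens / 1_000_000 * rate_per_million


for tokens in (150_000, 200_000, 210_000):
    print(tokens, f"${gemini_input_cost(tokens):.4f}")
# 150000 $0.1875
# 200000 $0.2500
# 210000 $0.5250
```

Under this assumption, going from 200k to 210k tokens (5% more context) more than doubles the input cost of that request.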

What can you do about all this? There are a bunch of things you can do, and in this article I’ll focus on the easiest one: /newtask.

3. The solution(s)

How do you reduce your context window usage and pay less to LLM providers? There are a few approaches you could take, all variations on the same theme.

Reduce the size of your (input) context window with /newtask

Imagine a scenario: You’ve written 10 prompts in Kilo Code so far. The agent created a few files for you, wrote hundreds of lines of code and deployed the feature you wanted.

You now want to start building a new feature. At the same time, you don’t want to re-explain things to the LLM; you’ve already spent a lot of time explaining your project’s quirks and conventions. You want the LLM to “remember” all of that so you don’t waste time re-explaining it.

/newtask to the rescue: In a nutshell, /newtask takes your existing context and “transfers it” to your, well, new task. But how does that even work?

How it works: One word: summarization. In the background, Cline/Kilo takes everything in your current task and tries to summarize it as well as possible, without leaving out any important details.

Cline/Kilo then feeds this summary into your subsequent LLM requests. This lets the AI model stay “aware” of what Cline/Kilo did, what you told it to do, which steps were taken and what results were observed.

Pretty powerful stuff

In practical terms, you should expect ~1-3k input tokens to be added to your new task. That’s still far better than carrying over the entire context window from your previous task.
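Conceptually, the handoff replaces a long transcript with a single summary message. The Python sketch below illustrates that idea only; it is not Kilo Code’s or Cline’s actual implementation. `new_task` and `fake_summarize` are hypothetical helpers (a real tool would ask the LLM to write the summary), the old task is filler text, and token counts use the ~4 characters per token heuristic.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def estimate_tokens(text: str) -> int:
    # ~4 characters per token rule of thumb (rounded up).
    return (len(text) + 3) // 4


def context_tokens(history: List[Message]) -> int:
    return sum(estimate_tokens(m["content"]) for m in history)


def new_task(history: List[Message], summarize: Callable[[List[Message]], str]) -> List[Message]:
    """Start a fresh task whose only context is a summary of the previous one."""
    summary = summarize(history)
    return [{"role": "user", "content": f"Context from the previous task:\n{summary}"}]


def fake_summarize(history: List[Message]) -> str:
    # Hypothetical stand-in: a real tool would have the LLM write this summary.
    return "Built feature X in src/feature_x.py; tests pass; follow the project's naming conventions."


# Hypothetical previous task: 10 turns of filler, ~12k tokens each.
old_history = [{"role": "user", "content": "x" * 48_000} for _ in range(10)]
fresh = new_task(old_history, fake_summarize)
print(context_tokens(old_history), context_tokens(fresh))
```

Every request in the new task now starts from the short summary instead of ~120k tokens of history, which is where the savings come from.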

Note: Another way to manually “summarize” your input context is with /smol. Cline has this and we’ll soon add it to Kilo.

When to summarize your context

There are different opinions about this:

Cline implicitly recommends doing it when you reach 50% of your overall context window size

Some people change their approach depending on the model. For example, many people using Gemini 2.5 Pro will use /newtask or /smol when they approach the 200k-token threshold (the point where Google starts charging double).
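Both rules of thumb can be combined into one check. This Python sketch is an illustration, not a Kilo Code or Cline setting: `should_start_new_task` is a hypothetical helper, and the 0.9 safety margin before a pricing threshold is an arbitrary assumption you would tune yourself.

```python
from typing import Optional


def should_start_new_task(
    used_tokens: int,
    window_tokens: int,
    price_threshold: Optional[int] = None,
    margin: float = 0.9,
) -> bool:
    """Decide whether to hand off to a new task (or summarize).

    Rule 1 (Cline's guidance): usage has passed 50% of the context window.
    Rule 2 (optional, e.g. Gemini 2.5 Pro): usage is within `margin` of a pricing
    threshold, such as the 200k-token point where input prices double.
    """
    if used_tokens > 0.5 * window_tokens:
        return True
    if price_threshold is not None and used_tokens >= margin * price_threshold:
        return True
    return False


print(should_start_new_task(110_000, 200_000))             # Claude 3.7 Sonnet, past 50%: True
print(should_start_new_task(150_000, 1_000_000, 200_000))  # Gemini, below 180k: False
print(should_start_new_task(185_000, 1_000_000, 200_000))  # Gemini, nearing 200k: True
```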

If you want a more automatic way to do this, check out this .clinerule (you can import clinerules into Kilo).

Tame context window length whenever possible

Currently, you can do that with “compression”. You can choose when to compress manually (or set up an automatic rule for it): on a new task, on the current task, etc.

I think these approaches are a good thing; API costs can get really high, really quickly.

One way to experiment safely is to grab our free $20 tier and try out the latest and greatest AI coding models (Gemini 2.5 Pro, Claude 3.7 Sonnet, GPT-4.1, etc.).

Unfortunately, the `/newtask` switch didn't seem to work. It still sends out the same context size, and I still hit the context size limit error with Anthropic. My context has grown by 80k in just a few prompts. I discovered that Kilo included irrelevant files in my prompt.

As far as I understand, this is precisely how the Orchestrator in Kilo Code structures the execution of a task (or project). It familiarizes itself with the OVERALL task, breaks it down into smaller fragments, and then, one by one, uses message commands to assign these parts to the Assistants – such as the Architect, Coder, Debugger, and so on. Isn't the same algorithm being used here?