GPT-5: Worth the hype?

That's no moon!

GPT-5 has officially launched with “thinking built in,” and early signs suggest it's a practical upgrade focused on incremental improvements rather than dramatic breakthroughs.

The pricing story: GPT-5 undercuts Claude 4 Sonnet and matches Gemini 2.5 Pro on list price while delivering stronger performance, making it one of the most cost-effective frontier models available.

Horizon Beta Was the Preview

Those mysterious "Horizon Alpha" and "Horizon Beta" models on OpenRouter? Many suspected they were GPT-5, and they were right. The performance characteristics and timing made it fairly obvious to experienced developers.

The stealth testing worked. Developers were already calling it exceptional for coding before the official reveal.

What Makes GPT-5 Different

OpenAI describes GPT-5 as their "most advanced model, offering major improvements in reasoning, code quality, and user experience." According to OpenAI, the model excels particularly in:

Frontend development: Dramatically better at UI/UX code generation and debugging

Instruction following: More accurate interpretation of complex, multi-step requests

Intent inference: Better at understanding what you actually want, not just what you wrote

The official release includes three model variants:

GPT-5: The flagship model designed for logic and multi-step tasks

GPT-5-mini: Lightweight version for cost-sensitive applications

GPT-5-nano: Speed-optimized for low-latency applications

Bottom line: GPT-5 shows the biggest gains in technical work—coding, debugging, and complex reasoning—where OpenAI has been losing ground to competitors.

Automatic Reasoning: No More Manual Switching

Key improvement: The model automatically decides when to use deeper reasoning. No manual toggling required.

How it works:

Activates reasoning mode automatically for complex questions

Can be triggered with prompts like "think deeply about this."

Available for direct control via API
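For the API route, here is a minimal sketch of how a request with explicit reasoning control might be assembled. It assumes the `reasoning.effort` field of OpenAI's Responses API and the effort levels listed in the constant below; neither is stated in this post, so check the current API reference before relying on them. `build_request` is a hypothetical helper, and nothing here makes a network call.

```python
from typing import Optional

# Assumption: effort levels as documented by OpenAI around the GPT-5 launch.
# Verify against the current API reference before use.
ALLOWED_EFFORTS = ("minimal", "low", "medium", "high")


def build_request(prompt: str, effort: Optional[str] = None, model: str = "gpt-5") -> dict:
    """Build a request payload (hypothetical helper, no network access).

    With effort=None the reasoning field is omitted, which leaves the
    decision about how hard to think to the model's defaults.
    """
    payload = {"model": model, "input": prompt}
    if effort is not None:
        if effort not in ALLOWED_EFFORTS:
            raise ValueError(f"unknown reasoning effort: {effort!r}")
        # Explicit control: ask for a specific amount of reasoning.
        payload["reasoning"] = {"effort": effort}
    return payload


# Quick lookup: keep reasoning to a minimum for latency.
fast = build_request("What is the capital of France?", effort="minimal")

# Hard debugging question: request deeper reasoning.
deep = build_request("Why does this lock-free queue deadlock under load?", effort="high")
```

With the official `openai` Python package, a payload like this would typically be passed as `client.responses.create(**deep)`, assuming an API key is configured.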

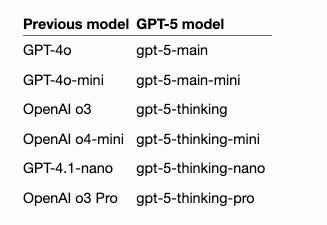

Simon Willison’s in-depth review of GPT-5 is also worth reading for its notes on the model card's mapping of old OpenAI models to their new equivalents.

Why it matters: This puts reasoning power where it's most needed without forcing users to guess when to turn it on.

The Competitive Pricing Advantage

Here's where GPT-5 gets interesting—the pricing makes it the most cost-effective frontier model:

GPT-5: $1.25 input / $10.00 output per 1M tokens

GPT-5 mini: $0.25 input / $2.00 output per 1M tokens

GPT-5 nano: $0.05 input / $0.40 output per 1M tokens

All of this comes with a 90% discount on cached input tokens, which could be game-changing for input-heavy workloads such as agentic coding tasks.
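To see what the cached-input discount means in practice, here is a rough cost sketch using only the list prices above. The function name, the model keys, and the example token counts are illustrative assumptions, and real billing may differ in how cached tokens are counted.

```python
# USD per 1M tokens, taken from the price list above.
PRICES = {
    "gpt-5": {"input": 1.25, "output": 10.00},
    "gpt-5-mini": {"input": 0.25, "output": 2.00},
    "gpt-5-nano": {"input": 0.05, "output": 0.40},
}

# A 90% discount means cached input is billed at 10% of the input price.
CACHED_INPUT_MULTIPLIER = 0.10


def request_cost(model: str, input_tokens: int, output_tokens: int,
                 cached_input_tokens: int = 0) -> float:
    """Estimate the USD cost of one request (illustrative helper)."""
    if not 0 <= cached_input_tokens <= input_tokens:
        raise ValueError("cached_input_tokens must be between 0 and input_tokens")
    price = PRICES[model]
    fresh_tokens = input_tokens - cached_input_tokens
    total = (
        fresh_tokens * price["input"]
        + cached_input_tokens * price["input"] * CACHED_INPUT_MULTIPLIER
        + output_tokens * price["output"]
    )
    return total / 1_000_000


# Hypothetical agentic coding turn: 100k tokens of context, 2k tokens of output.
uncached = request_cost("gpt-5", 100_000, 2_000)
mostly_cached = request_cost("gpt-5", 100_000, 2_000, cached_input_tokens=80_000)
print(f"no cache:   ${uncached:.3f}")
print(f"80% cached: ${mostly_cached:.3f}")
```

In this example the turn costs about $0.145 with no caching and about $0.055 when 80% of the input is served from cache, which is why the discount matters most for long, repeated contexts.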

How it compares:

Claude 4 Sonnet: $3.00 input / $15.00 output per 1M tokens

Gemini 2.5 Pro: $1.25 input / $10.00 output per 1M tokens

Gemini 2.5 Flash: $0.30 input / $2.50 output per 1M tokens

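Bottom line: GPT-5 delivers frontier-level performance at mid-tier pricing, matching Gemini 2.5 Pro and undercutting Claude 4 Sonnet on list price. GPT-5 mini competes directly with Gemini 2.5 Flash on cost, and GPT-5 nano sits well below both. Simon Willison also wrote about this comparison on his blog.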
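The comparison is easier to judge with a concrete workload. The sketch below ranks all six models by list price for a hypothetical job of 1M input tokens and 1M output tokens. It uses only the prices quoted in this post, ignores caching and batch discounts, and says nothing about output quality.

```python
# USD per 1M tokens (input, output), as quoted in this post.
LIST_PRICES = {
    "GPT-5": (1.25, 10.00),
    "GPT-5 mini": (0.25, 2.00),
    "GPT-5 nano": (0.05, 0.40),
    "Claude 4 Sonnet": (3.00, 15.00),
    "Gemini 2.5 Pro": (1.25, 10.00),
    "Gemini 2.5 Flash": (0.30, 2.50),
}


def workload_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """List-price cost in USD for a workload, with no caching or discounts."""
    input_price, output_price = LIST_PRICES[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


def rank_by_cost(input_tokens: int, output_tokens: int) -> list:
    """Return (model, cost) pairs sorted from cheapest to most expensive."""
    costs = [(m, workload_cost(m, input_tokens, output_tokens)) for m in LIST_PRICES]
    return sorted(costs, key=lambda pair: pair[1])


# Hypothetical workload: 1M input tokens and 1M output tokens.
for model, cost in rank_by_cost(1_000_000, 1_000_000):
    print(f"{model:<18} ${cost:.2f}")
```

For that workload, GPT-5 and Gemini 2.5 Pro both come to $11.25 against $18.00 for Claude 4 Sonnet, while GPT-5 mini ($2.25) edges out Gemini 2.5 Flash ($2.80) and GPT-5 nano costs $0.45.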

What Developers Are Saying

From weeks of Horizon Beta usage, the consistent feedback points to:

Better context retention: Less need to re-explain project details across conversations

More reliable code output: Higher rate of working, compilable code on first attempt

Faster performance: Reports of ~90-110 tokens/second generation speed

Practical debugging: More accurate identification of issues and suggested fixes

Outside of development uses, OpenAI also added Gmail and Google Calendar integration, voice improvements with customizable speaking styles, study mode for personalized learning, and other personality customization options.

The Verdict

GPT-5 isn't the revolutionary leap some expected (no, AGI is not here today). Based on early evidence, it's the incremental-but-meaningful upgrade that makes existing workflows smoother—at prices that undercut the competition.

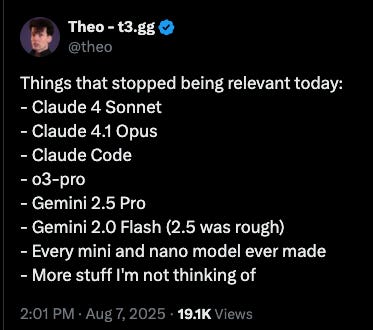

Theo says that’s the end game for other models:

Sometimes evolution beats revolution. But only time will tell.

I bet its agentic performance still won't outperform, or even come close to, Sonnet 4.

I haven't used it for coding, but for my purposes it feels like a huge step down from 4o. Every single question takes ten times longer to "think deeply". There is perhaps a slight benefit in the quality of answers, but it isn't worth the extra waiting that accompanies every single request.