Google's digging a moat

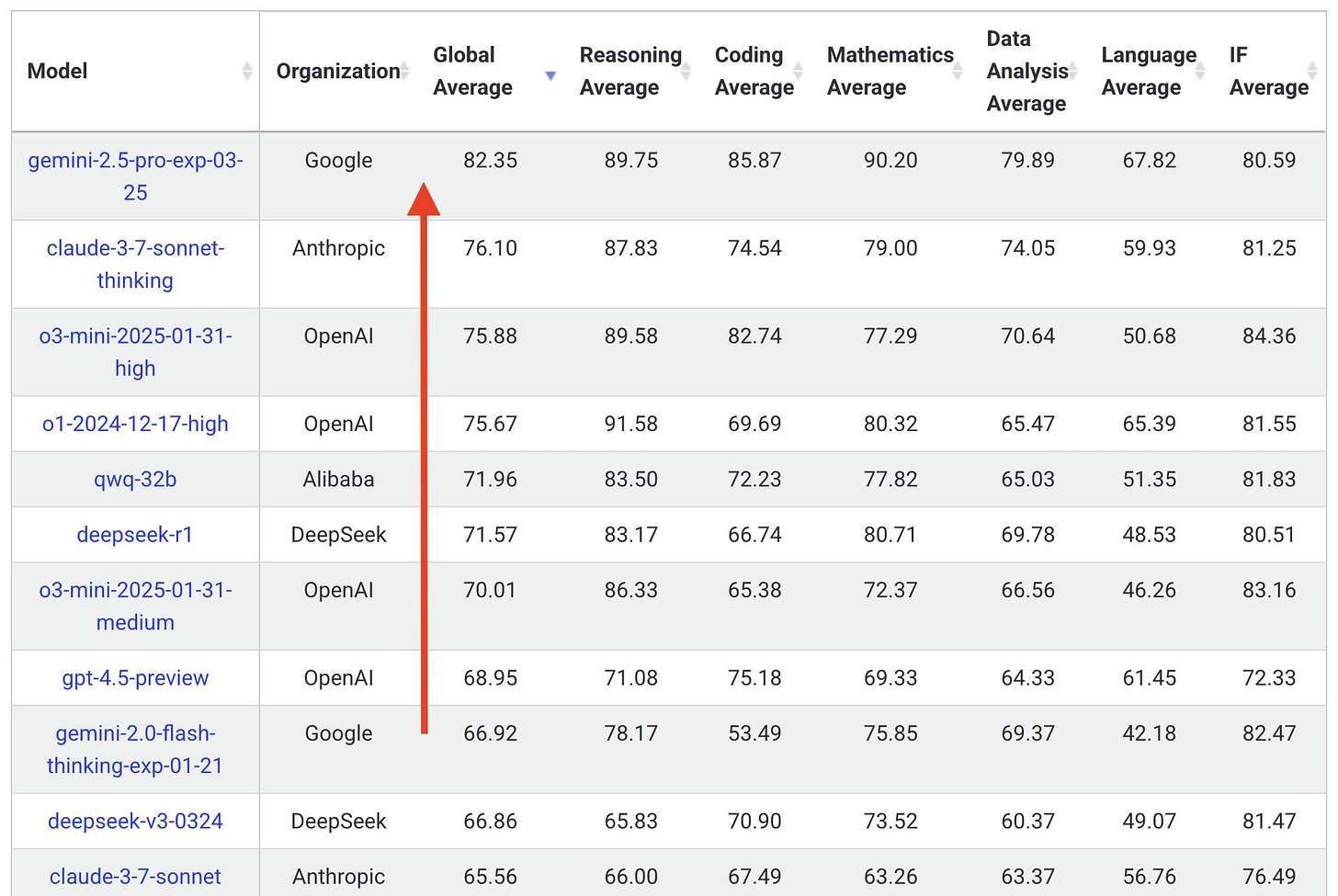

Google strikes back with Gemini 2.5, but Claude keeps half of the lead—for now

After the DeepSeek craze of the past few weeks, it seems that Google is really cooking again. From what we can see so far, Google might now have a moat. They just released Gemini 2.5, and everyone is having a great time hitting API rate limits on OpenRouter (enjoy, it's free), even though we don’t yet know how much it will cost to use.

But hey, let’s not get distracted: it’s a really, really impressive model, with multimodality, function calling, built-in Google Search, and a massive 1 million token context window (with 2M coming soon!). Also, Gemma 3, with a 128K context window and function calling, was released just a few weeks ago, so it’s a huge win for everyone in the open source community who wants to run coding agents locally.

We thought it might be fun to do some vibecoding (yes, it’s official now) comparing Gemini 2.5 with Claude 3.7 Sonnet, as well as taking a peek at the future of coding and at what we can infer from reported benchmark results and model limitations. At Kilo Code, we're very excited about models that perform well on coding benchmarks, and Google DeepMind just posted impressive numbers on LiveCodeBench v5, Aider Polyglot, and SWE-bench Verified.

On SWE-bench Verified, a human-validated subset of 500 SWE-bench tasks that evaluates a model’s ability to solve real issues from popular open source Python projects, Gemini 2.5 just achieved 63.8%, while Claude 3.7 is still in the lead with 70.3%.

Back in January, Anthropic shared a blog post titled Raising the bar on SWE-bench Verified with Claude 3.5 Sonnet, and they really meant it: Claude has been one of the favorite models for agentic coding ever since (Claude 3.7 Sonnet is our default in Kilo). But the competition is working hard to take the lead.

Aider Polyglot is another interesting benchmark, in which a model needs to generalize across many languages, such as C++, Java, and Rust, rather than just one. The original problems come from a learning platform called Exercism, which is one of the best ways to learn a new programming language. And as you can see in the picture above, Google just took the lead with 74% (or 68% using diff), versus just 64% for Claude 3.7 Sonnet.

What is surprising is that, for almost two years now, Google has been claiming with AlphaCode that its AI coding engine is as good as an average human programmer (demo). We all feel the AGI moment coming, but we’re just not there yet. AI is getting better and better at solving technical problems, but there’s still a lot of work to do on the surprisingly frequent AI slop.

Competitive programming is another area where Gemini 2.5 shines, one-shotting 70.4% of the problems in LiveCodeBench v5, another benchmark, built from problems on popular competitive programming and coding interview sites such as LeetCode, AtCoder, and CodeForces.

That’s why I’m so excited to see both closed and open source models shipping built-in support for structured outputs, code execution, function calling, and grounding answers with web search, all of which really boost reliability and model performance. And now that Gemini 2.5 is on par with Anthropic in terms of features, Google just needs to fully embrace MCP, as OpenAI has.

ToolComp, an often overlooked benchmark from Scale, evaluates tool usage: more specifically, the ability of agentic LLMs to chain multiple tool calls together compositionally to solve open-ended tasks. Can you guess what model is at the top? Yes, it’s OpenAI o3-mini-high (surprising, huh?). But right after that one comes Gemini 2.5 Pro Experimental.
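To make "chaining tool calls compositionally" a bit more concrete, here is a minimal Python sketch. It is not how ToolComp or any model API works internally; the tool names, the made-up population figures, and the `$N` placeholder convention are all assumptions invented for illustration. The point is only that a later call consumes the results of earlier ones.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical toy tools. The population figures are made up for the example.
def lookup_population(city: str) -> int:
    fake_data = {"Springfield": 120_000, "Shelbyville": 80_000}
    return fake_data[city]


def add(a: int, b: int) -> int:
    return a + b


TOOLS: Dict[str, Callable] = {"population": lookup_population, "add": add}

# A step is (tool name, arguments). An argument like "$0" is an assumed
# convention meaning "use the result of step 0".
Step = Tuple[str, List[object]]


def run_chain(tools: Dict[str, Callable], steps: List[Step]) -> List[object]:
    """Run the steps in order, feeding earlier results into later calls."""
    results: List[object] = []
    for name, args in steps:
        resolved = [
            results[int(arg[1:])]
            if isinstance(arg, str) and arg.startswith("$")
            else arg
            for arg in args
        ]
        results.append(tools[name](*resolved))
    return results


plan: List[Step] = [
    ("population", ["Springfield"]),
    ("population", ["Shelbyville"]),
    ("add", ["$0", "$1"]),  # depends on both earlier calls
]
results = run_chain(TOOLS, plan)
```

In a real agent, the model would produce the plan (or each next step) itself, and a mistake in any intermediate call would propagate to the final answer, which is what makes this kind of task hard.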

Rising from the bottom of all the benchmarks, Google is now getting almost all the podiums, and so fast! Two years ago, leaked documents told us that both Google and OpenAI would fade out due to rapid innovation and lower costs from the open source community, a prediction that DeepSeek's impeccable performance seemed to confirm. But now it seems that Google is finally catching up.

But it’s not just coding benchmarks. On LMArena, a popular benchmark in which users blindly compare two models and choose the best answer, Google just made the second-largest score jump ever (+40 pts vs Grok-3/GPT-4.5). (The largest was 69.30 points, when GPT-4 Turbo took over from Claude-1 on November 16, 2023.) So it seems that Google is really in e/acc mode now.

But that’s enough benchmarks, let’s do some vibecoding! How about replicating Chrome's offline Dinosaur Game to test Gemini 2.5 against Claude 3.7? We’re going to borrow Google's original prompt to see if we can replicate their results. We pasted the following prompt (from the official video) into Kilo Code:

“Make me a captivating endless runner game. Key instructions on the screen. p5js scene, no html. I like pixelated dinosaurs and interesting backgrounds.”

Claude 3.7

Note how there are obstacles, but no collisions. And did we really want pixelated mountains (that make you nauseous)?
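For reference, the missing piece is small. Below is a minimal, language-agnostic sketch of an axis-aligned bounding box check, written in Python rather than p5.js. It is not taken from either model's output; the `Box` type, the function names, and the coordinates are assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Box:
    """Axis-aligned rectangle; (x, y) is the top-left corner, in pixels."""
    x: float
    y: float
    w: float
    h: float


def overlaps(a: Box, b: Box) -> bool:
    """Return True when the two rectangles share any area."""
    return (
        a.x < b.x + b.w
        and b.x < a.x + a.w
        and a.y < b.y + b.h
        and b.y < a.y + a.h
    )


def hits_any(player: Box, obstacles: Iterable[Box]) -> bool:
    """Check the player against every obstacle currently on screen."""
    return any(overlaps(player, obstacle) for obstacle in obstacles)


# Hypothetical positions: y grows downward, as in most 2D canvases.
dino = Box(x=50, y=200, w=40, h=40)
dino_jumping = Box(x=50, y=100, w=40, h=40)
cactus_far = Box(x=300, y=210, w=20, h=30)
cactus_near = Box(x=80, y=210, w=20, h=30)
```

A game loop would call something like `hits_any` once per frame and end the run when it returns `True`; here the dino on the ground overlaps `cactus_near`, while the jumping dino clears it.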

Gemini 2.5

The Gemini version is a bit more pleasant on the eyes, but is missing the obstacles (which are present in the original video). Overall, not bad!

But judge for yourself: check out the code here, or just binge-watch the coding examples that Google DeepMind released on YouTube.

Congrats to the whole Google DeepMind team, you've just started to build a moat. 🏰