GLM-4.6: A Data-Driven Look at China's Rising AI Model

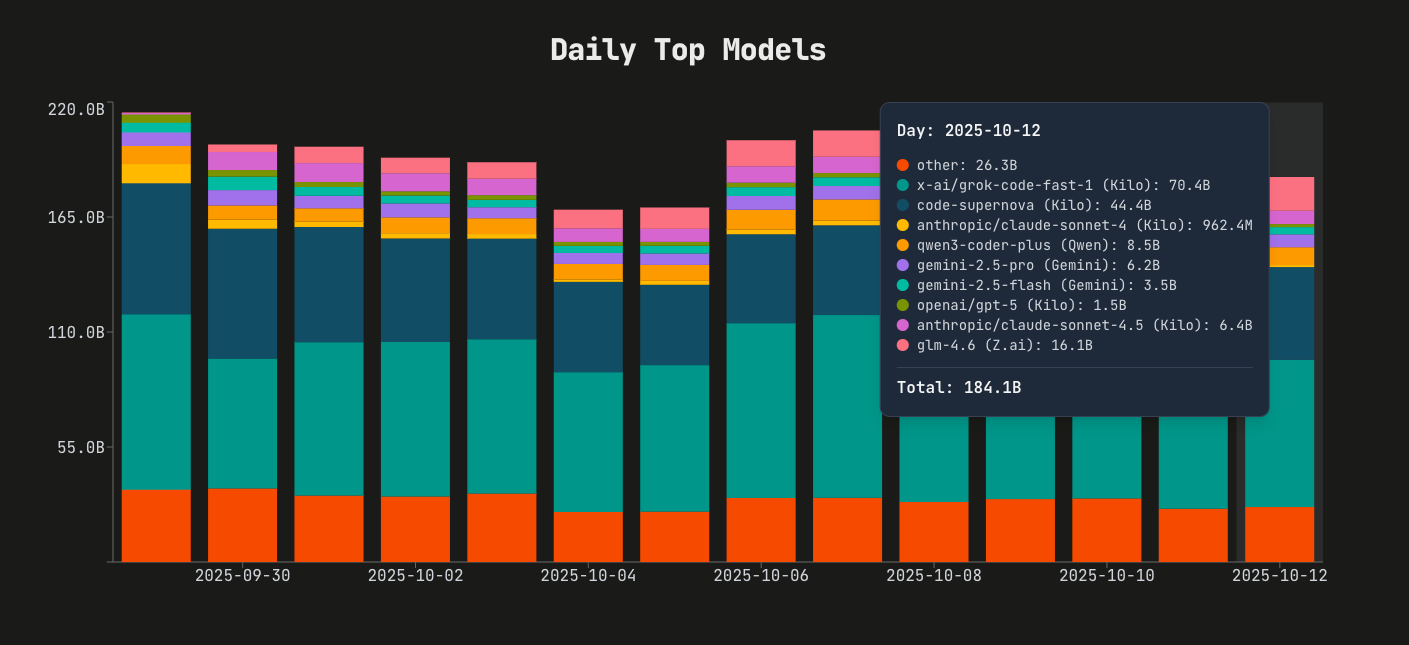

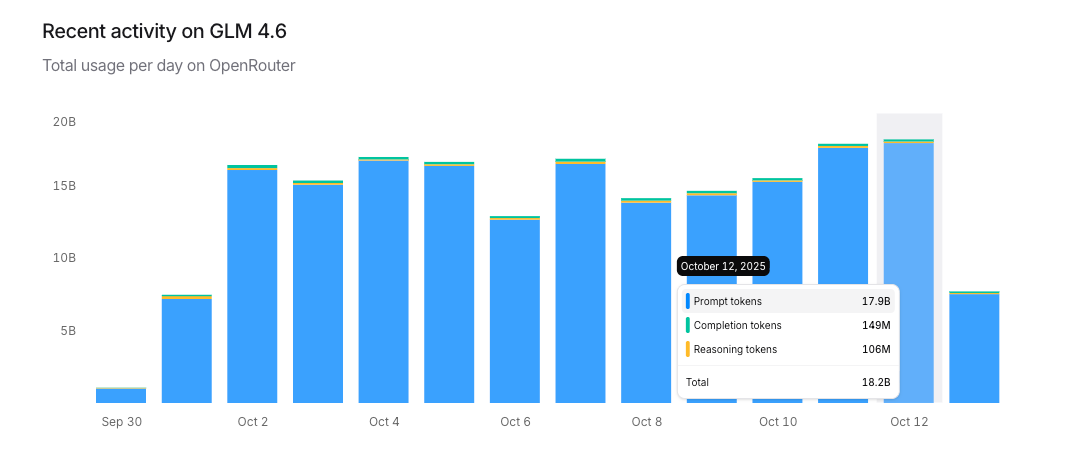

A Chinese AI model went from 168 million to 15.9 billion tokens in 12 days—a 94-fold explosion that nobody saw coming.

On September 29th, GLM-4.6 recorded 168 million tokens on Kilo Code’s leaderboard. Twelve days later: 15.9 billion tokens. That’s one of the fastest adoption curves we’ve seen for any open-source or open-weights language model.
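As a quick sanity check on the headline figure, the arithmetic can be sketched in a few lines of Python. The two token counts and the 12-day window come from the numbers above; the assumption that growth compounded evenly every day is ours, made only for illustration, and the real leaderboard curve may look quite different.

```python
# Token counts reported above (Kilo Code leaderboard figures quoted in this article).
start_tokens = 168e6   # September 29th
end_tokens = 15.9e9    # twelve days later
days = 12

# Overall multiple between the two readings.
growth_factor = end_tokens / start_tokens

# Assumption for illustration only: growth compounded at a constant daily rate.
daily_rate = growth_factor ** (1 / days) - 1

print(f"Growth factor: {growth_factor:.1f}x")
print(f"Implied average daily growth: {daily_rate:.1%}")
```

Under that even-compounding assumption, usage would have had to grow by a bit under half every single day to cover the gap.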

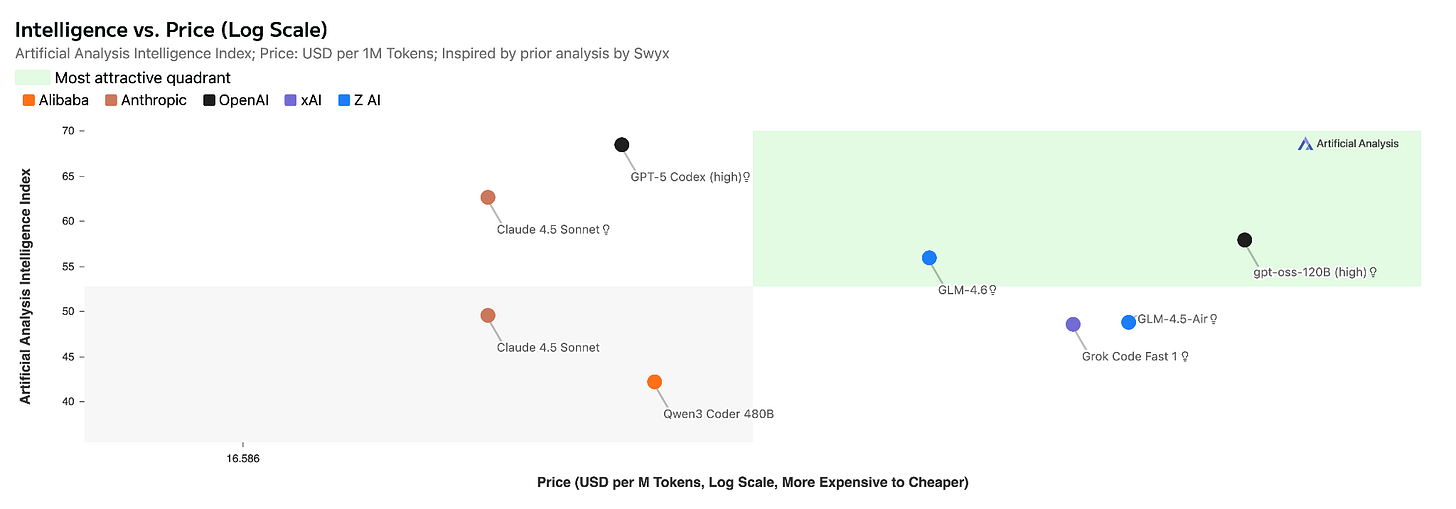

Why it matters: GLM-4.6 from Zhipu AI suggests you can run a frontier-class model without NVIDIA chips, charge roughly 2% of Western prices, and still rank fourth on the LMArena leaderboard. The AI market isn’t what we thought it was.

Background: Zhipu AI and the Hardware Challenge

Zhipu AI, now branded as Z.ai, is a Tsinghua University spinoff founded in 2019, backed by Alibaba and Tencent. After U.S. export restrictions cut off access to NVIDIA GPUs earlier this year, Zhipu partnered with domestic chip manufacturers Cambricon and Moore Threads. GLM-4.6 runs on these Chinese-made accelerators using FP8/Int4 quantization, enabling the 357-billion-parameter model to operate without Western hardware. That likely contributes to the company’s ability to offer the service at a fraction of competitors’ prices.

Performance Metrics and Benchmarks

According to the LMArena leaderboard, GLM-4.6 ranks fourth overall and first among open source models. In practical coding evaluations (74 challenges run in a Claude Code environment), GLM-4.6 achieved a 48.6% win rate against Anthropic’s Claude Sonnet 4.

The model’s specifications include:

357 billion parameters in a Mixture-of-Experts architecture

200,000 token context window (approximately 400 pages of text)

MIT licensed open weights

15% improvement in token efficiency compared to GLM-4.5

Zhipu’s internal evaluations show GLM-4.6 leading on benchmarks like AIME (mathematics) and BrowseComp (web browsing), while trailing Claude Sonnet 4.5 on complex long-form reasoning tasks like τ²-Bench.

The Pricing Model

Zhipu’s GLM Coding Plan starts at $3 USD per month for the Lite tier, increasing to $6 after the promotional period. The Pro tier runs $15 initially, $30 thereafter. For context, Anthropic’s Claude API charges approximately $3 per million input tokens and $15 per million output tokens. A moderate coding session can easily consume millions of tokens.

The subscription includes what Zhipu describes as “tens to hundreds of billions” of tokens monthly. Even at the standard rates, this represents a 50-100x cost reduction compared to leading Western API services.
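To make the comparison concrete, here is a rough sketch of the arithmetic. The per-million-token API prices and the standard plan prices are the ones quoted above; the monthly workload (100 million input tokens, 20 million output tokens) and the helper name `api_cost_usd` are hypothetical, chosen only to illustrate the calculation. The sketch also assumes the subscription’s usage limits actually cover that volume, which we have not verified.

```python
def api_cost_usd(input_tokens, output_tokens,
                 input_price_per_m=3.0, output_price_per_m=15.0):
    """Pay-as-you-go cost in USD at the per-million-token prices quoted above."""
    return (input_tokens / 1e6 * input_price_per_m
            + output_tokens / 1e6 * output_price_per_m)

# Hypothetical monthly workload, not a measured figure.
monthly_input = 100_000_000
monthly_output = 20_000_000

payg = api_cost_usd(monthly_input, monthly_output)

# Standard (post-promotion) subscription prices from the article.
plans = {"Lite": 6.0, "Pro": 30.0}
for name, price in plans.items():
    ratio = payg / price
    print(f"{name}: ${price:.0f}/month vs ${payg:.0f} pay-as-you-go ({ratio:.0f}x)")
```

The ratio depends heavily on how many tokens you actually burn through in a month: light users see a much smaller gap, while heavy users see a larger one.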

What developers actually say

I analyzed discussions across Reddit’s AI communities and Hacker News. The pattern is clear:

The praise:

“Far better than any open-source model in my testing”

Strong on structured coding tasks, especially frontend

Excellent logical reasoning and problem decomposition

Native bilingual support (Chinese/English)

The reality:

Performance gap with Claude Sonnet 4.5 on complex tasks

One developer: Claude Code finished a Unity game in 6 hours; GLM-4.6 struggled despite consuming millions of tokens

13% syntax error rate vs. 5.5% in GLM-4.5

Occasional language mixing in outputs

The pragmatic pattern: Although this model can’t compete head-to-head with Sonnet 4.5, it is “90% there,” according to one Hacker News user. As for Gemini, “it feels more creative than Gemini when it comes to UI, follows instructions better than Gemini, and makes fewer or no syntax errors.”

The deeper story

This isn’t about GLM-4.6 being “better” than Claude or GPT-5. It’s about a new kind of market segmentation that we haven’t seen before.

Turns out there’s massive demand for “good enough” AI at commodity prices. If 80% of coding tasks can be handled by a model costing 2% of premium alternatives, the economics become compelling.
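A back-of-the-envelope sketch shows why. It takes the 80% and 2% figures from the paragraph above and assumes, purely for illustration, that every task would cost the same on the premium model and that the remaining 20% of tasks stay on it. The function name `blended_cost` is our own.

```python
def blended_cost(cheap_share, cheap_relative_price):
    """Total spend relative to an all-premium baseline of 1.0.

    cheap_share: fraction of tasks routed to the cheaper model.
    cheap_relative_price: cheaper model's price as a fraction of premium.
    Assumes every task costs the same on the premium model.
    """
    return cheap_share * cheap_relative_price + (1 - cheap_share) * 1.0

blend = blended_cost(cheap_share=0.80, cheap_relative_price=0.02)
savings = 1 - blend

print(f"Blended spend: {blend:.1%} of the all-premium baseline")
print(f"Savings: {savings:.1%}")
```

Note that in this sketch almost all of the remaining spend comes from the 20% of tasks that stay on the premium model, so the savings are capped by how much work can actually be moved over.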

What this means: The market is moving toward commoditization faster than anyone anticipated. Not because of technical breakthroughs, but because alternative development paths bypass traditional constraints.

The stakes: This signals a new phase in AI development, with competitive models from Chinese labs running on domestic hardware and offered at prices that undercut Western providers by up to two orders of magnitude.

What’s next

The 94-fold growth in 12 days isn’t just adoption; it’s validation. Developers are voting with their API calls, signaling that accessibility and cost-effectiveness matter as much as raw capability.

Action steps for developers:

Test GLM-4.6 for routine coding tasks

Keep premium models for complex reasoning

Download the open weights if you need data sovereignty

Watch the pricing—promotional rates end eventually

Action steps for companies:

Recognize the “good enough” AI market is real

Budget-conscious teams have viable alternatives now

Hardware independence matters for long-term strategy

The AI duopoly just became a competitive market

The bottom line: We’re watching the democratization of AI capabilities in real time. By the accounts above, GLM-4.6 delivers roughly 80-90% of frontier capability at a fraction of the cost. For many use cases, that trade-off is acceptable.

The question isn’t whether this changes the market. The Kilo Code data already answered that. The question is what happens when the next model does this even better.