Every Engineering Manager's AI Dilemma

“Just use more AI” doesn’t an engineering or business strategy make

Your developers are using AI. That’s not changing. The only question is whether you’re giving them a sanctioned, secure way to do it—or watching it happen in the shadows until something breaks.

The BYOAI (bring your own AI) era isn’t coming. It’s here. And every day you spend deciding whether to engage with that reality is another day someone else gets ahead.

Every engineering leader I talk to is hearing the same thing from their CEO: “Our competitors are using AI. We need an AI strategy. Figure it out.” The manager nods, walks back to their desk, and realizes they have absolutely no idea how to actually do this well and safely.

Developers Already Chose AI

While leadership debates AI strategy, tech workers have already made the decision: they’re using AI tools through personal accounts and expense-reported subscriptions.

Why it matters: This isn’t a gap between adoption and readiness. It’s a chasm. Only 13% of companies feel ready to capture value from generative AI, but developers aren’t waiting for permission.

Reality check: Your developers aren’t being reckless. They’re watching peers at other companies ship features in hours that used to take days. In GitHub’s controlled experiment with Copilot, developers using AI assistance completed a coding task 55% faster. The productivity gains are real, so they find workarounds.

The deeper story: Developers sanitize code snippets “just enough” before pasting them into ChatGPT. They debate in Slack whether their API schema counts as “proprietary.” They convince themselves that one small function couldn’t possibly matter.

By the numbers:

The stakes: Every unsanctioned ChatGPT session risks sending proprietary code into a training pipeline you can’t see. Every personal Copilot subscription is a security audit waiting to happen. Every “just this once” workaround becomes standard practice.

What’s next: Stop debating adoption. Start channeling it. Give your team transparent, secure AI tools with clear boundaries, or watch it happen in the shadows with zero visibility and maximum risk.

Why the Standard Playbook Fails

Most engineering leaders I talk to are stuck in analysis paralysis. They see two options, and both look terrible:

1️⃣ Build internal tooling. Spin up an AI infrastructure team. Hire ML engineers (good luck—they’re the most competitive hire in tech). Set up GPU clusters. Build observability. Manage model updates. Create an internal developer platform. Maintain it forever while the AI landscape shifts every quarter.

2️⃣ Buy a black box. Sign up for GitHub Copilot, Cursor, or whoever has the slickest demo. Accept opaque pricing with “fair use” clauses you don’t understand. Watch rate limits appear mysteriously during crunch time. Get invoiced for five figures because you crossed some invisible threshold the vendor won’t explain until after the fact.

One developer recently described Cursor’s pricing as “bait-and-switch”—promising unlimited usage that quietly degraded once they were locked in.

Both approaches miss a third option, where your developers get the AI superpowers they’re already using in secret and you get the security, cost control, and visibility you need to sleep at night.

The Mental Model That Works

The best teams I’ve seen have landed on the same mental model: treat AI like a very fast, energetic junior developer.

Smart. Enthusiastic. Has read every public repo and technical textbook. Outputs code at lightning speed.

BUT also: zero context about your specific project. Needs explicit instructions. Makes confident mistakes. Requires code review like everyone else.

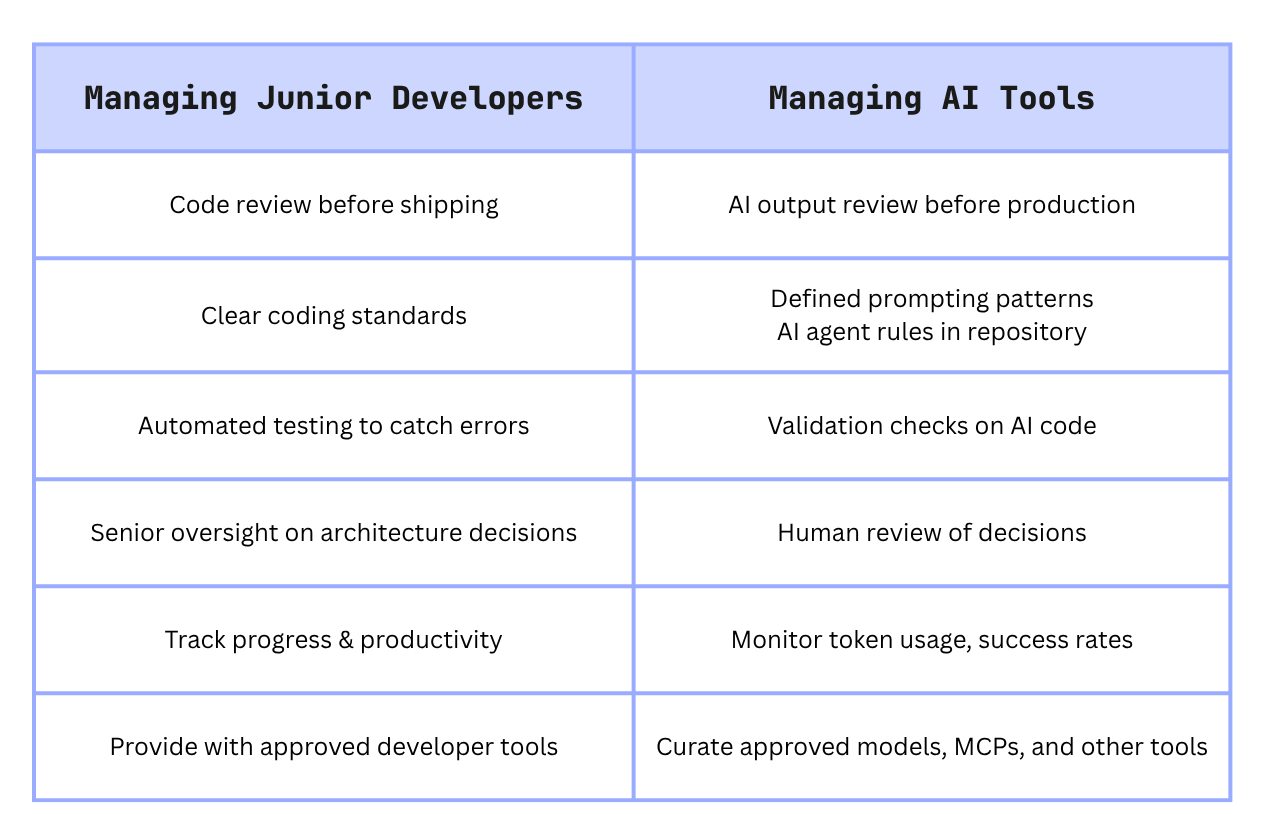

This framing solves most of the “AI strategy” problem. You don’t need a special governance framework for AI. You need the same framework you use for junior developers:

The difference is that this junior developer works at 50 to 120 tokens per second and never needs coffee breaks.

What Engineering Leaders Actually Need to Provide

Once you adopt the ‘very fast junior developer’ mental model, the path out of the engineering manager’s dilemma becomes clear:

📊 Real visibility into usage

Track your AI junior like any team member. See which models tackle which tasks. Know what works. Aggregated dashboards aren’t enough; you need request-level data. You wouldn’t let a junior work in isolation. Don’t do it with AI.
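To make “request-level data” concrete, here is a minimal sketch, assuming your AI gateway logs one record per request with the developer, the model, a task label, and whether the developer kept the output. The `UsageRecord` fields, model names, and task labels are all hypothetical; the point is that per-request records let you ask which models work for which tasks, a question a single aggregate number can’t answer.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class UsageRecord:
    """One AI request as a hypothetical gateway might log it."""
    developer: str
    model: str
    task: str       # hypothetical labels such as "tests", "refactor", "docs"
    accepted: bool  # did the developer keep the output?


def acceptance_by_model_and_task(records):
    """Return {(model, task): (request_count, acceptance_rate)}."""
    totals = defaultdict(lambda: [0, 0])  # (model, task) -> [requests, accepted]
    for r in records:
        bucket = totals[(r.model, r.task)]
        bucket[0] += 1
        bucket[1] += int(r.accepted)
    return {key: (n, kept / n) for key, (n, kept) in totals.items()}


records = [
    UsageRecord("ana", "model-a", "tests", True),
    UsageRecord("ana", "model-a", "tests", False),
    UsageRecord("raj", "model-b", "refactor", True),
]

for (model, task), (n, rate) in sorted(acceptance_by_model_and_task(records).items()):
    print(f"{model} {task}: requests={n} accepted={rate:.0%}")
```

Acceptance rate is only one possible signal; the same grouping works for latency, cost, or review comments per model.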

🚦 Guardrails, not gates

Junior developers need freedom within boundaries. So does AI.

Approved model lists (like library access for juniors)

Clear data-sharing policies (the “don’t push to main” equivalent)

Automatic sensitive-data flagging (proactive code review)
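The three guardrails above can be sketched as a single pre-flight check. This is a minimal illustration, assuming a hypothetical approved-model list and a few example secret patterns (the AWS access key ID prefix and PEM private-key header are real formats; the internal hostname domain is a placeholder). In the “guardrails, not gates” spirit, it returns findings instead of raising, so the caller can warn, redact, or log rather than block.

```python
import re

# Hypothetical policy: approved models and patterns that look like secrets.
APPROVED_MODELS = {"model-a", "model-b"}

SENSITIVE_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    # Placeholder domain; substitute your own internal naming scheme.
    "internal_hostname": re.compile(r"\b[\w-]+\.internal\.example\.com\b"),
}


def check_request(model: str, prompt: str) -> list:
    """Return policy findings; an empty list means nothing was flagged."""
    findings = []
    if model not in APPROVED_MODELS:
        findings.append(f"model not on approved list: {model}")
    for name, pattern in SENSITIVE_PATTERNS.items():
        if pattern.search(prompt):
            findings.append(f"possible sensitive data: {name}")
    return findings


print(check_request("model-a", "Refactor this function"))  # []
print(check_request("model-z", "key = 'AKIAABCDEFGHIJKLMNOP'"))
```

Regex flagging catches only the obvious cases; treat it as the proactive first pass, not a replacement for human review.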

🔄 Model flexibility

The best model changes monthly. Anthropic releases Claude Sonnet 4.5. OpenAI ships o3. Some open-source model dominates overnight. Your AI junior needs the latest tools, without forcing everyone to learn new interfaces.
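One way to get that flexibility is to put every model behind the same call signature and route by task through a config table. The sketch below assumes a hypothetical `complete(task, prompt)` entry point and uses offline stand-ins instead of real provider SDKs; swapping in this month’s best model becomes a one-line routing change while developers keep calling the same function.

```python
from typing import Callable, Dict

# Any model is just a function from prompt to completion. Real provider
# clients would be wrapped behind this signature; these are offline stand-ins.
Completion = Callable[[str], str]


def fake_provider(name: str) -> Completion:
    """Stand-in for a real provider client so the sketch runs offline."""
    return lambda prompt: f"[{name}] {prompt}"


# Hypothetical routing table: task type -> model name.
ROUTES: Dict[str, str] = {"tests": "model-a", "refactor": "model-b"}
PROVIDERS: Dict[str, Completion] = {
    "model-a": fake_provider("model-a"),
    "model-b": fake_provider("model-b"),
}
DEFAULT_MODEL = "model-a"


def complete(task: str, prompt: str) -> str:
    """Single entry point developers use, regardless of which model answers."""
    model = ROUTES.get(task, DEFAULT_MODEL)
    return PROVIDERS[model](prompt)


print(complete("refactor", "Extract this method"))  # [model-b] Extract this method
ROUTES["refactor"] = "model-a"  # a different model wins this month: update the route
print(complete("refactor", "Extract this method"))  # [model-a] Extract this method
```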

💰 Cost transparency

You know what juniors cost: salary, benefits, equipment. AI should be equally transparent. Forget “500 AI credits.” Show actual per-model costs. If Claude Opus costs more but needs less review, maybe it’s worth it—like paying extra for a junior who needs less mentoring.
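The Opus-style trade-off above is simple arithmetic once costs are per model: API spend plus the human review time the output demands. A minimal sketch with made-up prices, token volumes, review hours, and reviewer rate (none of these are real vendor figures):

```python
from dataclasses import dataclass


@dataclass
class ModelUsage:
    name: str
    input_tokens: int
    output_tokens: int
    usd_per_m_input: float   # hypothetical price per million input tokens
    usd_per_m_output: float  # hypothetical price per million output tokens
    review_hours: float      # human review time spent on this model's output

    def api_cost(self) -> float:
        """Token spend in USD."""
        return (self.input_tokens * self.usd_per_m_input
                + self.output_tokens * self.usd_per_m_output) / 1_000_000

    def total_cost(self, reviewer_usd_per_hour: float) -> float:
        """Token spend plus the cost of reviewing the output."""
        return self.api_cost() + self.review_hours * reviewer_usd_per_hour


cheap = ModelUsage("cheaper-model", 20_000_000, 4_000_000, 3.0, 15.0, review_hours=30)
premium = ModelUsage("premium-model", 20_000_000, 4_000_000, 15.0, 75.0, review_hours=20)

for m in (cheap, premium):
    print(f"{m.name}: api=${m.api_cost():,.0f} total=${m.total_cost(100):,.0f}")
```

With these invented numbers, the premium model costs $600 in API fees versus $120, but $2,600 versus $3,120 once review time at $100/hour is included. Real conclusions depend entirely on your own usage and review data.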

Starting From Yes, Actually

Shopify’s approach is right: “Start from yes.” Assume AI will accelerate your team. Work backward to make it safe.

But what does that actually look like in practice?

Acknowledge reality. Your team is already using AI. The conversation isn’t “should we allow this?” It’s “how do we make this work?”

Provide sanctioned tools. Give developers an approved way to use AI that meets your security requirements: SSO, SCIM provisioning, and whatever else your security team mandates. If the alternative is random personal accounts, you’ve already lost.

Create clear policies, not permission structures. Don’t make people ask before using AI. Give them guidelines on what’s allowed and trust them to follow it. Treat exceptions as opportunities to update guidelines, not punish individuals.

Measure outcomes, not activity. Track whether AI usage correlates with faster shipping, fewer bugs, better documentation. Not whether people are “using it right.”

Invest in AI literacy. The developers who get the most from AI aren’t the ones who use it most. They’re the ones who use it best—knowing when to trust it, when to question it, and how to structure prompts for useful results.
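For the “measure outcomes, not activity” point, a first pass can be as simple as comparing cycle time and bug rate between AI-assisted and other pull requests. This sketch assumes you can label PRs as AI-assisted (for example, by joining gateway logs to your VCS export); the `PullRequest` fields and data are hypothetical. It shows correlation only: AI-assisted PRs may differ in size or type, so treat gaps as prompts for investigation, not proof.

```python
from statistics import median
from typing import NamedTuple


class PullRequest(NamedTuple):
    pr_id: int
    ai_assisted: bool        # hypothetical label from joining gateway logs to PRs
    cycle_time_hours: float  # open-to-merge time
    caused_bug: bool         # linked to a later bug report


prs = [
    PullRequest(101, True, 6.0, False),
    PullRequest(102, True, 9.0, True),
    PullRequest(103, True, 4.0, False),
    PullRequest(104, False, 12.0, False),
    PullRequest(105, False, 8.0, False),
    PullRequest(106, False, 15.0, True),
]


def summarize(rows):
    """Outcome metrics for a group of PRs."""
    return {
        "prs": len(rows),
        "median_cycle_hours": median(r.cycle_time_hours for r in rows),
        "bug_rate": sum(r.caused_bug for r in rows) / len(rows),
    }


assisted = summarize([p for p in prs if p.ai_assisted])
baseline = summarize([p for p in prs if not p.ai_assisted])
print("AI-assisted:", assisted)
print("Baseline:   ", baseline)
```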

The Choice You’re Actually Making

The problem isn’t whether to adopt AI for software development. That decision has already been made—by your developers, whether you sanctioned it or not. The real question is how to enable AI adoption in a way that doesn’t create more problems than it solves.
