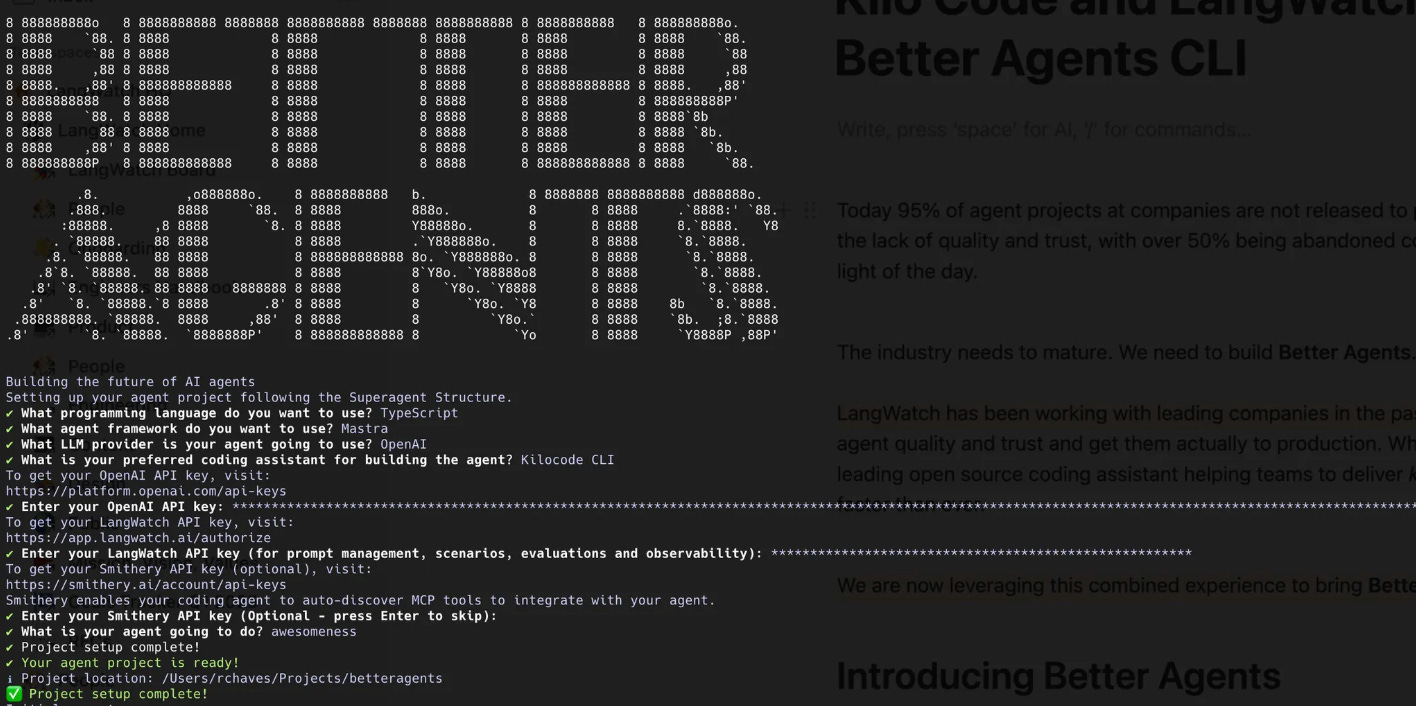

Better Agents CLI with Kilo Code and LangWatch

This is a guest post by Rogerio Chaves, CTO and Founder of LangWatch. He brings 14 years of software engineering experience to solving one of AI’s biggest challenges: making AI agents more reliable, testable, and production-ready.

Today, more than 95% of enterprise agent projects fail to reach production due to a lack of reliability, evaluation discipline, and trust. Most are abandoned entirely, never making it past the prototype phase.

The industry needs to mature. We need to build Better Agents.

Over the past two years, LangWatch has worked with leading companies to help them cross the agent reliability barrier and bring agent systems safely into production. Meanwhile, Kilo Code has been the leading open source coding assistant, helping teams deliver kilos of high-quality code faster than ever.

Now, we’re combining this experience to bring you: Better Agents CLI.

Introducing Better Agents by LangWatch

Better Agents is a CLI toolkit and a set of standards designed for building reliable, production-grade AI agents.

Better Agents CLI supercharges Kilo Code, making it an expert in whichever agent framework you choose (Agno, Mastra, etc.) and its best practices, and able to autodiscover MCP tools to augment your agent.

The Better Agents Structure and the generated AGENTS.md ensure your project follows industry best practices, making your agent ready for production:

Scenario agent tests written for every feature to verify agent behavior

Prompt versioning for traceability and collaboration

Evaluation notebooks for measuring specific prompt performance

Built-in instrumentation and observability

Standardization of structure for better project maintainability

The Better Agents Structure

    my-agent-project/
    ├── app/ (or src/)           # The actual agent code, structured according to the chosen framework
    ├── tests/
    │   ├── evaluations/         # Jupyter notebooks for evaluations
    │   │   └── example_eval.ipynb
    │   └── scenarios/           # End-to-end scenario tests
    │       └── example_scenario.test.{py,ts}
    ├── prompts/                 # Versioned prompt files for team collaboration
    │   └── sample_prompt.yaml
    ├── prompts.json             # Prompt registry
    ├── .mcp.json                # MCP server configuration
    ├── AGENTS.md                # Development guidelines
    ├── .env                     # Environment variables
    └── .gitignore

The structure and the guidelines in AGENTS.md ensure that every new feature you ask the coding assistant to build is properly tested and evaluated, and that its prompts are versioned.

The .mcp.json file comes with the relevant MCP servers already set up, so your coding assistant becomes an expert in your framework of choice and knows where to find new tools.
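To make the role of .mcp.json more concrete, here is a minimal sketch that lists the servers declared in such a file. It assumes the commonly used layout with a top-level `mcpServers` object keyed by server name. The server name, command, and package in the sample are hypothetical placeholders, not the entries Better Agents actually generates.

```python
import json
from typing import Dict, List

# Hypothetical sample content; the file generated for your project will differ.
SAMPLE_MCP_JSON = """
{
  "mcpServers": {
    "example-docs": {
      "command": "npx",
      "args": ["-y", "example-docs-mcp"]
    }
  }
}
"""


def list_mcp_servers(raw_config: str) -> Dict[str, List[str]]:
    """Map each configured server name to the command line that would start it."""
    config = json.loads(raw_config)
    servers = config.get("mcpServers", {})  # assumed top-level key
    return {
        name: [spec.get("command", "")] + list(spec.get("args", []))
        for name, spec in servers.items()
    }


if __name__ == "__main__":
    for name, command_line in list_mcp_servers(SAMPLE_MCP_JSON).items():
        print(f"{name}: {' '.join(command_line)}")
```

A quick check like this can help confirm which tools your coding assistant will have access to before you start a session.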

The scenarios/ tests simulate a conversation with the agent and check that it behaves as expected.
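The idea behind a scenario test can be sketched in plain Python. This is not the API of any particular testing library: `stub_agent` and `run_scenario` are hypothetical names, and the agent is a canned stand-in so the example runs without a model. A real scenario test would drive your actual agent and would typically use a simulated user and richer success criteria.

```python
from typing import Callable, List, Tuple

Agent = Callable[[str], str]
Transcript = List[Tuple[str, str]]


def stub_agent(message: str) -> str:
    """Hypothetical stand-in for a real agent: only knows the refund policy."""
    if "refund" in message.lower():
        return "You can request a refund within 30 days of purchase."
    return "Sorry, I can only help with refund questions."


def run_scenario(agent: Agent, turns: List[Tuple[str, str]]) -> Tuple[bool, Transcript]:
    """Play scripted user turns and check each reply contains the expected phrase.

    Each turn is (user_message, expected_phrase). Stops at the first failure.
    """
    transcript: Transcript = []
    for user_message, expected_phrase in turns:
        reply = agent(user_message)
        transcript.append((user_message, reply))
        if expected_phrase.lower() not in reply.lower():
            return False, transcript
    return True, transcript


def test_refund_scenario() -> None:
    passed, transcript = run_scenario(
        stub_agent,
        [
            ("Hi, how do I get a refund?", "30 days"),
            ("Can you book me a flight?", "only help with refund"),
        ],
    )
    # Include the transcript in the failure message to ease debugging.
    assert passed, transcript


if __name__ == "__main__":
    test_refund_scenario()
    print("scenario passed")
```

Substring checks are deliberately simple here. They keep the sketch deterministic, at the cost of being brittle against rephrased answers.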

The evaluations/ folder holds datasets and notebooks for evaluating individual pieces of your agent pipeline, such as a RAG step or a classification task it must perform.
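As an illustration of what one cell of such a notebook might compute, the sketch below measures the accuracy of a classification step over a small labeled dataset. The dataset is made up for illustration, and `keyword_classifier` is a hypothetical stand-in for the model-backed component you would actually evaluate.

```python
from typing import Callable, List, Tuple

# Toy, made-up dataset of (message, expected_label) pairs, for illustration only.
DATASET: List[Tuple[str, str]] = [
    ("I want my money back", "refund"),
    ("Where is my package?", "shipping"),
    ("Please refund my order", "refund"),
    ("My delivery is late", "shipping"),
]


def keyword_classifier(message: str) -> str:
    """Hypothetical stand-in for the classification step under evaluation."""
    text = message.lower()
    if "refund" in text or "money back" in text:
        return "refund"
    return "shipping"


def accuracy(classifier: Callable[[str], str], dataset: List[Tuple[str, str]]) -> float:
    """Fraction of examples where the predicted label matches the expected one."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    correct = sum(1 for message, label in dataset if classifier(message) == label)
    return correct / len(dataset)


if __name__ == "__main__":
    print(f"accuracy: {accuracy(keyword_classifier, DATASET):.2f}")
```

Tracking a number like this over time lets you tell whether a prompt or model change improved a specific step or quietly made it worse.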

Finally, prompts/ holds all your versioned prompts in YAML format, synced and controlled by prompts.json, to allow for playground use and team collaboration.
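To show how a registry file can tie prompt names to versioned files, here is a small sketch. The registry schema used here (a `prompts` object with `file` and `version` fields) is an assumption made purely for illustration and is not the actual prompts.json format. `resolve_prompt` is likewise a hypothetical helper.

```python
import json
from pathlib import PurePosixPath
from typing import Tuple

# Hypothetical registry content: this schema is an assumption for illustration,
# not the actual prompts.json format.
SAMPLE_REGISTRY = """
{
  "prompts": {
    "sample_prompt": {"file": "prompts/sample_prompt.yaml", "version": 1}
  }
}
"""


def resolve_prompt(registry_json: str, handle: str) -> Tuple[PurePosixPath, int]:
    """Look up a prompt by name and return its file path and pinned version."""
    registry = json.loads(registry_json)
    try:
        entry = registry["prompts"][handle]
    except KeyError:
        raise KeyError(f"prompt '{handle}' is not registered") from None
    return PurePosixPath(entry["file"]), int(entry["version"])


if __name__ == "__main__":
    path, version = resolve_prompt(SAMPLE_REGISTRY, "sample_prompt")
    print(f"{path} (version {version})")
```

Whatever the real schema looks like, the design point is the same: code refers to a prompt by name, and the registry decides which file and version that name resolves to.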

Get Started

Get started with Better Agents today and generate new agent projects that follow best practices from day one: built for reliability and maintainability, and ready for production from the moment you initialize them.