As software developers, we have a dirty secret: we don’t quite like writing documentation.

We'll spend hours optimizing a function to save milliseconds, but ask us to write a README and suddenly we're "too busy."

Say hello to AGENTS.md—a documentation standard that has the potential to convince even the most documentation-averse developers to actually document their code.

Why? Because now we're writing for AI coding assistants, not humans. /s

The problem with all AI models & coding assistants

If you've worked with Kilo Code (or any other AI coding assistant, like Cursor or Claude Code), you've probably experienced this: the AI doesn't seem to get your project's conventions.

It doesn't know you use a specific library for your tests.

It doesn't know your team's "all comments should be capitalized" rule.

It doesn't understand that your functions shouldn't be longer than 20 lines.

As a result, you end up repeating the same context in every conversation, watching the AI make the same mistakes, and fixing the same issues.

It's like onboarding a new junior developer who’s quite forgetful.

The underlying reason for this is that AI models are stateless; each request is processed independently without access to previous conversations, requiring you to provide the same context repeatedly.

Fortunately, most AI coding assistants (including Kilo Code) have a way to mitigate this problem: rule files.

In Cursor, they’re called Cursor Rules.

In Claude Code, you have CLAUDE.md.

In Kilo Code, you put those rules in a .kilocode/rules directory. You can see where I’m going here…

Every AI coding assistant has its own way of doing things

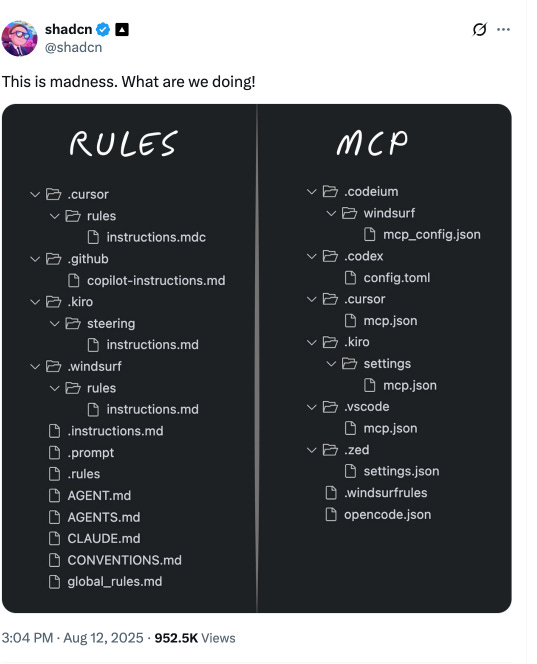

This tweet by ShadCN captures the problem perfectly:

Every AI coding agent has a separate rules file/folder structure.

Rule files are part of the standard project anatomy

These days, rule files are to AI coding what .gitignore files are to Git: if you use AI assistants, you most likely have one.

The data backs this up: a GitHub search for .cursorrules returns 25,000 results, and a search for CLAUDE.md returns 160,000. We're creating rule files en masse.

So you could argue that rule files have already “tricked” us into writing new docs.

The problem with having different rules for different agents is change. AI models change (a new one is released almost every week). AI coding assistants change. And many of us like to use multiple coding assistants at once: ask each one to solve the same problem, end up with three or four versions, and choose the best output.

If we use multiple tools for something, we want our “config files” (like rule files) to be portable across all of those tools.

AGENTS.md = README for AI coding agents

At its core, AGENTS.md is simply a rules file: a Markdown file that contains all the context an AI coding assistant needs to work effectively on your project.

You can think of AGENTS.md as a project README.md, specifically for AI agents.

This standard was on the front page of Hacker News several days ago, and I saw several people commenting that, ironically, it will make people less lazy about writing actual, useful docs:

People were too lazy to write docs for other people, but funnily enough are ok with doing it for robots.

AGENTS.md & rule files make us document things we've been too lazy to explain to people

Take a look at a typical AGENTS.md file and you'll see that it contains a bunch of nitty-gritty details that AI agents need:

Project overview and architecture

Build and test commands

Code style guidelines ("use this assert library", "never write comments")

Testing instructions and conventions

Security considerations and gotchas

Commit message formats

Deployment steps

Locations of large datasets or resources

Basically, anything you'd tell a new teammate on their first day. Unlike with your teammate, here you get instant gratification; the AI coding agent will get your file and be mindful of everything you said starting from your first prompt.

Here’s a small practical example of an AGENTS.md file:
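The file below is a hypothetical sketch rather than one taken from a real project. The project description, directory names, and commit rule are assumptions made up for illustration, and the style rules simply reuse the conventions mentioned earlier in this post:

```markdown
# AGENTS.md

## Project overview
A hypothetical TypeScript web API. Source lives in `src/`, tests in `tests/`.

## Build and test commands
- Install dependencies: `npm install`
- Run the test suite: `npm test`

## Code style
- Capitalize all comments.
- Keep functions under 20 lines.

## Commit messages
- Use the imperative mood, e.g. "Add login rate limiting".
```

Nothing here is specific to one tool: it is plain Markdown, so any assistant that reads AGENTS.md can pick it up.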

Why people write rules over READMEs: Instant gratification

Why does AGENTS.md (and rule files in general) have the potential to “trick us” into writing better documentation?

Well, it's all about simple human psychology. You get immediate feedback and results: you write it once, and your AI assistant immediately becomes more useful. The feedback loop is much longer with READMEs.

Some could argue that it's also about the absence of pushback: AI won't judge you for any 'weird conventions' or 'hacky workarounds'.

AGENTS.md is gaining traction

As I've mentioned before, the official site claims that over 20,000 projects are using AGENTS.md. I checked today and that number was over 25,000.

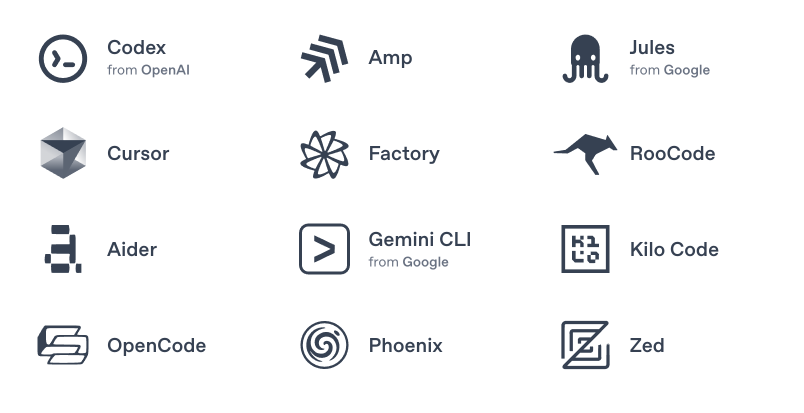

Also, 12 major AI coding assistants have started supporting AGENTS.md, including Kilo Code.

You can have multiple AGENTS.md files

The base implementation is to place a single AGENTS.md in your project root.

If you have a monorepo, you can use nested AGENTS.md files. To do that, place a main AGENTS.md file in the root and additional ones in subdirectories. The closest AGENTS.md file wins (just like .gitignore).

For example, OpenAI's main repository contains 88 different AGENTS.md files. When an agent works on a specific subcomponent, it automatically picks up the most relevant context from the nearest AGENTS.md file in the directory tree.
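The "closest file wins" rule can be sketched as a walk up the directory tree. This is only an illustration in Python: the function name `find_nearest_agents_md` and its parameters are hypothetical, and real assistants may resolve or combine nested files differently.

```python
from pathlib import Path
from typing import Optional


def find_nearest_agents_md(start: Path, repo_root: Path) -> Optional[Path]:
    """Return the AGENTS.md closest to `start`, searching upward to `repo_root`.

    Hypothetical helper: it models the "closest file wins" rule only,
    not the behavior of any particular assistant.
    """
    start = start.resolve()
    repo_root = repo_root.resolve()

    # Begin in the directory that contains the file being edited.
    current = start if start.is_dir() else start.parent

    # Refuse to search outside the repository.
    if current != repo_root and repo_root not in current.parents:
        raise ValueError(f"{start} is not inside {repo_root}")

    while True:
        candidate = current / "AGENTS.md"
        if candidate.is_file():
            return candidate
        if current == repo_root:
            # Reached the top of the repository without finding a file.
            return None
        current = current.parent
```

Under these assumptions, a file at packages/api/src/handler.py would resolve to packages/api/AGENTS.md if that file exists, and fall back to the root AGENTS.md otherwise.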

Summary

AGENTS.md might be one of the most successful tricks ever played on us developers. We're finally writing comprehensive documentation—not because someone told us to, but because it makes our robot assistants more useful.

As AI coding assistants become more prevalent (and let's face it, most of us are already using multiple agents), having a standardized way to provide context becomes crucial. AGENTS.md solves this elegantly: one standard, multiple tools, zero friction.

The irony is fascinating: AI, often blamed for making developers lazy, is actually starting to motivate us to write better documentation. Even if you think LLMs don't dramatically improve coding productivity, they've at least tricked us into documenting our projects properly.

Claude Code has the /init command to bootstrap its version of AGENTS.md. What is Kilo Code's equivalent?